Hi everybody!

My name’s Lance, and this will be my learning diary for COMP 650: Social Computing. Whether you’re taking the course with me, or whether you’re a friend or family member interested in sharing the journey, or whether you’re a stranger led here by a combination of a hyperlink and a twist of fate, you are welcome.

I am not the only person who gets to talk here–the comments are open–but please remember that basic Internet decorum still applies. Be polite and respectful of our differing points of view, don’t say anything you’re not willing to have others examine and dissect, and generally try to follow a social version of the Robustness Principle: “Be conservative in what you do, be liberal in what you accept from others.” [1]

Alternatively, I will accept a slightly modified formulation of the tenth pledge: “Let’s all have fun and [learn] together!” [2]

I’ll see you in the comments!

[1] Postel, J. (Ed.). (1980, January). Transmission control protocol. IETF. https://doi.org/10.17487/RFC0761.

[2] Ōdate, K. (Writer), & Hanada, J. (Director). (2014, April 9). Beginner (Season 1, Episode 1) [TV series episode]. In Y. Hayashi, S. Tanaka, M. Shimizu, S. Fukao, & A. Shimizu (Producers), No Game No Life. Madhouse.

-

Welcome to my COMP 650 learning diary!

-

Week 13 Reflections

I did correctly report the times I was available to attend the Artifact presentation. And yet, we ended up with the presentation taking place on Friday afternoon anyway! This was an excellent, excellent way for everything to turn out for me, as it gave me a little bit of extra time to get everything ready(ish) for the presentation.

Originally, I planned to have a “proper” presentation, by which I mean a PowerPoint with slides and bullet points and everything else… but in all honesty, it just wasn’t happening. Rather than trying to make it “official” and business-like, I chose to simply tell a story–I know the community I was building for, I know the background, I have a relatively good understanding of the people involved and the various conditions influencing the course of events… telling the story and demonstrating the Artifact I created just felt more natural and more honest to the material.

And I think the presentation itself went relatively well. I talked too much and ran into the time I had planned to allow for questions, but I did manage to finish under 20 minutes and still had a few minutes left for questions. I classify that as a success, overall.

This was the working setup of TediCross, using two test bots, a test group on Telegram, and a test server on Discord. I also showed my configuration file during the presentation, but I won’t replicate it here–it’s largely default, and I don’t really need to share bot tokens and channel IDs with the wider Internet!

These ended up being my notes for the presentation. Yeah… far, far from a PowerPoint presentation. I just wrote down my reminder points and spoke from the heart, as it were.

The other Artifact presentations were also interesting. Chi presented a website offering e-portfolios, and Damian presented a vibe-coded social networking website for gardeners. I even made a post about “salting the earth” on Damian’s site while he was presenting, just to see how it worked. (I wanted so badly to suggest a little badging system to designate longevity of membership… everything from “bud” to “new leaf” to “shoot”, etc… but mostly so that the system administrator could be designated “root.” I didn’t want it to be all about me cracking jokes, though.)

And so I’m now at the end of COMP 650: Social Computing. As I sit and reflect back on the entire term… while it’s a relatively small sample space so far, I feel like I’ve done the most learning in this course. It’s not the sheer amount of reading (though that has been very high!), but the reading has been… less targeted, if that makes sense? Like, COMP 695 has also had a substantial amount of reading, but it’s been very tightly focused on extracting bits and pieces of techniques and methods from the readings. In contrast, the readings here have been much wider and not targeted on specific points. Rather, they’ve been based on entire topics. That means that what I’ve read, I’ve read out of pure interest, which means I’ve spent a lot more time on the readings I have done and absorbed a lot more detail from them.

The course isn’t what I thought it would be originally, or even at second glance. It’s weird to think about it, but it wasn’t even on my short list for potential electives originally. Even once I identified this as “Actually, this should be one of my primary goals, if I get the chance,” it turns out I didn’t understand the course at all. We definitely examined some of the topics I had in mind, but… in a very, very different way than I had thought. It wasn’t “Here are the points that you should learn,” but “Here’s the field… here are some things to ponder, maybe… otherwise, go nuts!”

Honestly, I still like Brightspace as a system (about as much as I liked Moodle), but I do recognize that it’s… mismatched, is probably a good way to put it, against the content and format of this course. Whatever else it might be, Brightspace is not any sort of social networking platform, and the format for this course really kind of seems to work best with a relatively tight set of social interactions. If everybody is reporting on their thoughts and reflections, and everybody else can absorb that and emanate their own thoughts and reflections in return… a bit like a nuclear reaction, you need all of those thoughts emitted in an environment where they will collide with other course participants in order to spark new reactions. If everybody goes off to their own corner, then even if they reflect in private, you won’t get the same critical reaction.

The Landing might be much more optimized for this. I think you could replicate it to an extent, even without necessarily using the Landing, but… you need that relatively cohesive, tight environment in order to sustain the (social) reaction. Rather than every participant setting up their own kind of learning diary in a location and format of their choice, selecting a platform and instructing people to use it to create their learning diary would offer the advantage of being able to ensure all the participants are working in (relatively) close (social) proximity to each other. If the platform offers an easy “friends list” (or the Landing’s circles, I think?), so much the better for ensuring everybody has fast access to everybody else’s output. That would, essentially, produce more optimal conditions to sustain an increased reaction rate. But Brightspace alone is simply not going to be able to produce the conditions needed for this.

I won’t be taking two courses concurrently again if I can help it at all. I didn’t want to be taking two concurrently, but that’s how it worked out with the course offerings. And that, combined with still working full-time, made it exceedingly difficult to juggle both course loads and get my posts made on time. That didn’t help things at all, I’m sure. But, if I look on the bright(ish?) side, I can also take that as confirmation that I definitely cannot sustain that in future.

And now… to compile the portfolio and map the learning objectives. (I was rather pleased to be able to link back to the previous unit where I had remarked on creating a map, because that’s really exactly what we’re doing here… creating a map for marking the prodigious amounts of output I have verbosely blathered on to create.)

This has been a most rewarding segment of my journey. I look forward to unlocking still more in the future.

-

Week 12 Reflections

Continuing work on the Artifact.

Well, I did get a Discord bot stood up, along with my Telegram bot… and once I created a Telegram group and a Discord server and added the bots to their respective groups, I was also able to finish the setup of TediCross and cross-post between the two services. That’s a success!

Dr. Dron had some office hours this week. I’m glad I decided to attend the first one–I had fully intended to visit the last one as well, but I had overlooked a time collision there. If I hadn’t gone to the first office hour, I would have missed out entirely.

And that turned out to be really important. We’ve heard the phrase several times this term, “We shape our buildings and afterwards our buildings shape us.” (Churchill, 1943). I don’t recall whether it was this exact quote or a derivative that sparked a new thought for me this time… but the thought occurred to me that this is notably true for the community that I have in mind while building the Artifact.

Specifically, the community left another service, Slack, because certain individuals felt it was too ordered. The community was closely divided at the time, and I had originally managed to convince them of the benefits of remaining within an ordered environment while I was “managing” the community directly, but it was still a very narrow thing between the people who wanted to use the tool to collaborate and the people who disliked the tool because they wanted to keep all their socializing in one service (Telegram, in this particular locale).

Of course, once I was no longer “managing” that community, things changed. More to the point, I was replaced by the person who was leading the push to dump Slack (and all the “old ways” and “outsiders”) from the community. This they accomplished.

Anyways, it struck me that what happened was essentially a reshaping of the community to suit that particular person. Said person is no longer “managing” the community, but the legacy lives on. And while I have identified the current problems, confirmed via research that my instincts about a more effective team communication structure seem to be heading in the right direction, and even built out a theoretical, proposed solution to help the community transition into that better direction… I never stopped to delve deeply into precisely why they moved away from structured communication in the first place–or, even more importantly, why so many members of the community followed without complaint. Because there’s no point building something better if the community, as a whole, will not choose something better.

So. What did people gain by leaving a structured communication environment and going to a service where the communication was more opaque and confusing to deal with? What was the attraction? In fairness, there were probably several. I don’t deny that, for a lot of people, there’s a lot of convenience in being able to keep business and socialization in the same place. I don’t think it’s great, but so what? One of the biggest complaints about Slack was that it felt too “business-like.” And… that’s also where the complaints–from the community, at least–ended.

But what about the main person who was pushing to move things to Telegram? They happen to be a recognized narcissist, going so far as to refer to themselves as the emperor (!) of the entire city. And they take special pride in always being involved in everything they feel is even remotely important, taking any changes that might affect their access at any point in the future entirely personally. To this person, moving everything to Telegram is a huge benefit–if they are the only person in all the groups, that gives them greater information control and greater leverage in the group. (You could argue that would have the potential to increase their social capital as well, but everybody also knows the person cannot be trusted. With the one exception that they will always reliably do whatever benefits their ego the most, regardless of cost to anybody else.) The chaos personally benefits them, to the detriment of the community as a whole.

I did a bit of research on this, hunting down articles about narcissism and chaos. That was not entirely useful–I did find an article describing a “network model” of how narcissistic personalities may respond to social media conversations, but judged it only loosely related to the current question. I also found Mazella’s essay about narcissistic personalities and chaos. While useful from a psychology standpoint, it didn’t tell me anything specific about how this might influence the community, or why the community might choose to follow them into a less productive, less resilient style of communication.

Eventually, however, I also came across Gruda et al.’s work on leader narcissism and follower engagement. This looks to be a solid, in-depth assessment of which follower traits might predict greater engagement with narcissistic leaders, and that’s very nearly exactly what I’m hunting for. Specifically, they found that follower agreeableness and neuroticism were positively correlated with interaction with narcissistic leaders, while follower conscientiousness was negatively correlated and extroversion did not seem to be correlated. There’s also an effect of popularity (or perceived popularity): the correlations are all stronger for more “popular” narcissistic leaders.

This, then, is a key that I was missing. Many people in this community are highly agreeable and want to avoid conflict. There’s also a relatively high degree of neuroticism (and also, a relatively low to moderate degree of conscientiousness–many people seem to only do things when it’s personally convenient for them). These are all attributes which correlate with greater engagement with a narcissistic leader! Far from being incomprehensible or somehow a mere error in judgement, the members of this community were already primed to follow the narcissistic leader into a more chaotic environment that benefited that leader and no one else. And that’s not something I had identified before.

So how does this change the Artifact, if at all? I’m not entirely sure that it changes anything, per se. But my perspective on it has certainly shifted. Absent “managerial direction” from a leader in the community, many people are likely to stay in the existing system, with or without a TediCross bot. But… hmm, how do I put this?

I identified a major drawback to people trying to enter Discord–without managerial input, everything of value stays in Telegram. By nullifying this drawback, I hoped that the “better system” of structured communication present in Discord would essentially be able to assert itself over time and become the preference of the community. But perhaps that was misguided of me. It is necessary to nullify that drawback, but not sufficient. Yes, people could then use Discord and enjoy the benefits… but the majority of the community’s members will clearly choose chaos willingly, if it means following a “strong” or “popular” leader. A technological measure cannot solve that conundrum alone.

And this, then, takes me back to an idea I had in Week 4, that of a “senior cadre.” At the time, I was thinking of it in terms of normalizing different viewpoints within a community in a non-threatening, even encouraging manner. If I borrow from my own idea, I now think that fostering such a group of respected “senior” members of the community, openly encouraging people to use whatever tool is most comfortable, might provide the missing component. Even absent a clear signal from the leader in any given situation, creating and reinforcing a norm of “use what you like, there’s no judgement about what’s ‘right’ or ‘allowed’, even us senior folks use a mix–some of us use Discord, others use Telegram, and we all work together in harmony anyway” may, through example, encourage some people to stick with whatever tool they feel is best. For some, that might be the chaotic-but-convenient option of Telegram. For some, that might be the more-organized option of Discord. But it will take social, person-to-person normalization to make that freedom of choice acceptable to the community as a whole.

At least, that’s what I think now.

References:

Gruda, D., Karanatsiou, D., Hanges, P., Golbeck, J., & Vakali, A. (2022). Don’t go chasing narcissists: A relational-based and multiverse perspective on leader narcissism and follower engagement using a machine learning approach. Personality and Social Psychology Bulletin, 49(7), 1130-1147. https://doi.org/10.1177/01461672221094976

Mazella, A. (n.d.). The need for conflict: The psychology behind chaos and conflict. drmazzella.com. https://www.drmazzella.com/the-need-for-conflict-the-psychology-behind-chaos-and-conflict/

-

Week 11 Reflections

Continuing work on the Artifact.

I read further into Order from Chaos. The idea of architectural control appeals to me in its elegance, but I can’t help but feel like I would be losing out on the ability to communicate norms and standards directly. To some extent, I realize, that’s the whole point–let the architecture of the system serve as the means to create boundaries for group member participation, rather than relying on normative control or managerial control.

Regardless, as a practical matter, I don’t think architectural control is going to be present in this Artifact. First, I don’t think this is a good fit for the community I have in mind! We’re tied to a physical event, where people need to know how to behave as hosts and how to perform their tasks effectively. Constraining behavior through the architecture of a digital environment is not going to be sufficient. Second, even if it can be used effectively, it would need a lot more time and thought to puzzle out, particularly since I’m not 100% sure how I would even begin to translate that structure into something that also works in meatspace. Third, there’s the simple fact that this particular community does remain (by necessity!) attached to an actual non-profit corporation. It would take quite a bit of… creative restructuring for the corporation to run events through a community that officially has no leadership at all. For the non-profit, there needs to be somebody officially in charge to whom authority can be delegated!

More of my time has been spent pursuing the idea of bridging Telegram and Discord. Both Telegram and Discord have bots, but I don’t have a solid understanding of the “plumbing” of either service, frankly. That said, after doing some digging and investigation, I was able to find a bot that’s intended to do exactly this–bridging Telegram and Discord. Perhaps fittingly, it’s named TediCross. (Cross-posting between “Te”legram and “Di”scord. Honestly, it’s the kind of name I would come up with, too!)

Now… it uses Node.js. Because of course it does. I dislike Node.js mostly because it has its own package manager. (I dislike Python for the same reasons, for that matter.) This is a bit of a detour, but I dislike having multiple package managers doing things all on their own in a system. In Ubuntu, if you want to update pip… how do you do that? You can have one version installed by the system package manager… you’re prompted to update it, though. Okay, how? Do you have to be root? Does that do the system-level pip, or the user-level pip? Does that overwrite or break the system package? Or does it just leave it a mess? Now repeat that for most of your other packages, and I can confirm that yes, it is a mess. Any time I do anything with Node.js or Python, I essentially have to configure a virtual machine just for that one task, to make sure it can’t contaminate anything else, and then bury it when that task is done. Contrast this with PHP, where I can just fetch the modules I want using the system package manager, or otherwise do the install myself, and there’s no messing around with separate package managers or supply-chain attacks. (Yes, I am opinionated.)

ANYWAY, I thought I’d give TediCross a try. I mean, I like the name, and it looks like it should do what I’m aiming for. I decided to run Node.js on my Windows desktop, and I started with the standalone ZIP file. I also downloaded the TediCross stable release as a ZIP file. Then I tried to run them.

Hoo boy. Well, there’s a bunch of errors. Some of it honestly looks like the commands for npm or other Node programs just weren’t being interpreted correctly. Right, well… fine then. I downloaded the proper installer for Node.js and ran that. The install is relatively smooth, but then it also needs to install Chocolatey and a bunch of other stuff, because some Node packages actually have to be compiled natively. Presumably this is what was making such a hash of things before. So I do that, including waiting for Visual Studio and all the other components to come down. I even reboot my desktop.

Aaaaaand… nope. Still have a pile of errors, though the errors have changed now that the libraries can actually be compiled. I fiddled with that for a while, before deciding that maybe I should try the dev version instead of the actual stable release. This got me a LOT further, and I now have a version of TediCross that I can run on my Windows desktop. Might be a little rough around the edges, but as a proof of concept for the Artifact, I think we’re getting somewhere.

I’ve created a Telegram bot before (not successfully, but hey), so I’m at least somewhat familiar with the @BotFather. But I’ve never created a bot with Discord, so I feel like I’ll probably take that side of it a little slower, just to make sure I understand what’s being created and what needs to be set in order for this to work…

References:

Massa, F. G., & O’Mahony, S. (2021). Order from chaos: How networked activists self-organize by creating a participation architecture. Administrative Science Quarterly, 66(4), 1037-1083. https://doi.org/10.1177/00018392211008880

Sabathil, L. (n.d.). TediCross. GitHub. Retrieved July 13, 2025, from https://github.com/TediCross/TediCross

-

Week 10 Reflections

Field Trip #3: I suggested Wizard101, but I was almost expecting one of my fellow students to shoot it down. To my surprise, they did not, and we wound up there this week!

One of the biggest things I wanted to show people in Wiz was the chat systems. It’s somewhat restrictive for new users to learn, but it’s clear (to me) that a lot of time and effort went into the design and refinement, with one goal in mind–making it safe for children to use.

For those under 13, Menu Chat offers pre-selected canned phrases and commands. Some of these can be quite complex, and there are a lot of alternate expressions that can be used for different “flavor” in communication.

Text Chat is available for users 13 and up. This is more flexible, but even then, it’s not as simple as you might expect. There’s a filter, but it’s not a blacklist. It’s a contextual whitelist. The whitelist part is easy enough–it understands “neighborhood” (the American spelling), but not “neighbourhood” (the Canadian spelling). So it allows one, but preemptively filters the other. The contextual part is more interesting to me–individually, the words “in,” “your,” and “pants” are all allowed. But string them together, and “in your pants” is immediately blocked. KingsIsle figured out quickly that it wasn’t sufficient to only block particular words; compound phrases composed of innocuous words could quickly become problematic as well. So the whitelist must also have a human-coded understanding of context.
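To make the whitelist-plus-context idea concrete, here’s a minimal Python sketch. The word list and blocked phrase are hypothetical stand-ins (KingsIsle’s actual lists and matching rules aren’t public); it only shows the two layers described above: every word must be on the whitelist, and certain runs of individually allowed words are still rejected.

```python
# Hypothetical word list and blocked phrase, for illustration only;
# the game's real lists and matching rules are not public.
ALLOWED_WORDS = {"welcome", "to", "the", "neighborhood", "nice", "in", "your", "pants", "hello"}
BLOCKED_PHRASES = [("in", "your", "pants")]


def tokenize(message: str) -> list:
    """Lowercase words with basic punctuation stripped ("Pants!" -> "pants")."""
    words = (w.strip(".,!?").lower() for w in message.split())
    return [w for w in words if w]


def contains_phrase(words: list, phrase: tuple) -> bool:
    """True if `phrase` appears as a contiguous run inside `words`."""
    n = len(phrase)
    return any(tuple(words[i:i + n]) == phrase for i in range(len(words) - n + 1))


def is_allowed(message: str) -> bool:
    words = tokenize(message)
    # Layer 1 (whitelist): every individual word must be known. Unknown words,
    # including spelling variants like "neighbourhood", are rejected outright.
    if any(w not in ALLOWED_WORDS for w in words):
        return False
    # Layer 2 (context): allowed words can still combine into a blocked phrase.
    return not any(contains_phrase(words, p) for p in BLOCKED_PHRASES)


print(is_allowed("Welcome to the neighborhood!"))   # True
print(is_allowed("Welcome to the neighbourhood!"))  # False: word not on the list
print(is_allowed("nice pants"))                     # True
print(is_allowed("in your pants"))                  # False: blocked phrase
```

The interesting design cost is the second layer: a blacklist only has to enumerate bad words, but a contextual whitelist has to anticipate bad combinations of good words, which is exactly the human-coded understanding of context mentioned above.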

We couldn’t test this during the field trip, because it requires actually putting money into the game, but from experience I know that Open Chat is also available for users verified 18+. That uses a standard blacklist to filter profanities, rather than the contextual whitelist. It’s therefore the most open and freest chat experience available, given it’s intended for adult users. Each user sees other players’ chat only as far as their own chat level allows. So as an Open Chat user, I can say many things that will appear censored to Text Chat users. And both of those will be completely invisible to Menu Chat users. So there are indicators in the text balloons and user nameplates to show what each person is capable of seeing, in order to allow users to calibrate their outbound communications appropriately.
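The three chat tiers can be modelled as a simple visibility rule. This is a rough sketch under my own assumptions: I’m assuming canned Menu Chat phrases are visible to everyone, and the `censor` function and its one-word list are hypothetical placeholders for whatever filtering KingsIsle actually applies to Open Chat text shown to Text Chat users.

```python
from enum import IntEnum
from typing import Optional


class ChatLevel(IntEnum):
    MENU = 0  # canned phrases only (under 13)
    TEXT = 1  # typed chat through the contextual whitelist (13+)
    OPEN = 2  # typed chat through a profanity blacklist (verified 18+)


# Hypothetical placeholder for the real filter applied to Open Chat text
# when it is shown to Text Chat users.
FILTERED_WORDS = {"darn"}


def censor(text: str) -> str:
    return " ".join("*" * len(w) if w.lower() in FILTERED_WORDS else w for w in text.split())


def render_for(viewer: ChatLevel, sender: ChatLevel, text: str) -> Optional[str]:
    """What `viewer` sees of a message from `sender`; None means hidden."""
    if sender == ChatLevel.MENU:
        return text  # assumed: canned phrases are safe for everyone
    if viewer == ChatLevel.MENU:
        return None  # typed chat is invisible to Menu Chat users
    if sender == ChatLevel.OPEN and viewer == ChatLevel.TEXT:
        return censor(text)  # Open Chat text arrives filtered for Text Chat users
    return text


for viewer in ChatLevel:
    print(viewer.name, "sees:", render_for(viewer, ChatLevel.OPEN, "darn it"))
# MENU sees: None
# TEXT sees: **** it
# OPEN sees: darn it
```

This is also why the nameplate indicators matter: the sender can’t see the `viewer` side of this function, so the game has to surface each listener’s level for people to calibrate what they type.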

No, there’s no voice chat in the game, but the chat systems have had a lot of thought and design put into them, and I’ve always thought that was worthy of respect and technical appreciation.

On a more administrative level, I sort of wish we had created a channel for voice communication, rather than just text chat outside of the game. Juggling two different forms of text chat in two different applications is a little more challenging than one text chat in game and voice in the background to relay things like “And here’s how it appears when I type…” Ah well. It was still a good learning adventure, I would say.

Artifact: So. This starts with reviewing the background of what exists, why it exists, and how it’s not working.

I assist with a non-profit. It runs several community events, staffed entirely with volunteers. By design, the non-profit doesn’t want to get involved in the day-to-day of the individual events, but does provide infrastructure that is intended to last through multiple years. (Volunteers come and go, but the infrastructure needs to stick around and be maintained.) Volunteers are always a resource we could use more of. Some of our existing volunteers work for more than one event, and any event will often call for assistance from their “sister” events.

One style of event coordination is to have a series of separated groups in Telegram. Nobody sees anything except for the groups they are already in, and there’s no way for people from other events to be able to offer advice or support, unless they’re first invited into a specific group… which they may not even know exists. It’s therefore difficult to coordinate people effectively, because people in one group may be discussing one plan while the main group is discussing a different plan for the same task, and neither of them realize this until the two plans collide.

My considered solution to this was a single, centralized Discord server for the non-profit. Each event could have a “folder” of channels dedicated to them, with a single “general” catch-all for cross-event chatter. Within each event “folder,” multiple channels could be created, whether for departments or even specific events within the main event… that would be up to each individual event to determine for themselves, and they could also manage their own permissions, to determine who can post in what sections (for example, a main “announcements” channel might have posting permissions limited to specific people). Volunteers at different events could then “drop in” and get excited about what’s happening, be encouraged to assist with their sister event, and freely offer suggestions or support as needed. And since people can see what groups already exist, there’s less chance of accidentally losing track of people or having particular departments grow too isolated.
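A toy model of the proposed server layout might look like the sketch below. It is not Discord’s API: real Discord servers use categories plus role-based permission overwrites, and the event and member names here are invented for illustration. It just captures the two properties I care about: every channel in every event folder is visible to everyone, while posting in some channels (like announcements) can be restricted.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Channel:
    name: str
    # None means any server member may post; otherwise only these members can.
    posters: Optional[Set[str]] = None

    def can_post(self, member: str) -> bool:
        return self.posters is None or member in self.posters


@dataclass
class Server:
    # Single catch-all channel for cross-event chatter.
    general: Channel = field(default_factory=lambda: Channel("general"))
    # One "folder" per event; each event decides its own channels.
    folders: Dict[str, List[Channel]] = field(default_factory=dict)

    def add_event(self, event: str, channels: List[Channel]) -> None:
        self.folders[event] = channels

    def visible_channels(self) -> List[str]:
        # Everyone can see every folder, so volunteers can drop in on sister events.
        names = [self.general.name]
        for event, channels in self.folders.items():
            names.extend(f"{event}/{c.name}" for c in channels)
        return names


# Hypothetical events and members, for illustration only.
server = Server()
server.add_event("spring-fest", [Channel("announcements", posters={"alice"}), Channel("logistics")])
server.add_event("winter-gala", [Channel("announcements", posters={"bob"}), Channel("volunteers")])
print(server.visible_channels())
print(server.folders["spring-fest"][0].can_post("bob"))  # False: announcements are restricted
```

The key contrast with separate Telegram groups is that visibility and posting rights are separate knobs here: restricting who can post doesn’t hide a channel’s existence from anyone.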

That was my thinking, at least. There’s just one slight problem–people aren’t using it. Volunteers have been “allowed” to join the Discord server, but without buy-in from the main planners in the event’s community, there’s no actual usage and therefore no momentum or critical mass which would allow the benefits to become visible.

Some of this is due to the particular event’s unwillingness to listen to, or be guided by, those they do not judge to be part of their community. Some of it is also due to their insistence that they continue to use Telegram regardless of its less-than-optimal group interaction, even when volunteers ask for a better alternative.

This leads me to research. This is very much a problem of a social network, without having anything to do with platforms or specific services (at least as I see it). Yet I wasn’t entirely sure how to phrase what I was looking for, and my search ended up being somewhat general to groups, social networks, and chaos. (This was mostly because I wanted to determine what about a group might pull it towards a chaotic system of hidden subgroups rather than an ordered approach of group-visible subgroups.)

Two research papers caught my attention, and I’m reading through them now. The first, Order from Chaos, is an interesting read (and a long one, which is why I’m still digging through it!) about how collectives might try to avoid “managerial control” and still deal with the challenges of coordinating collective action for a common purpose. It caught my eye largely because that’s exactly the struggle I can see in this particular group–they don’t want to be “managed” or controlled in any way, and especially not by any “outsiders” to their community. (Which still frustrates me–their definition of “community” is geographically bound and takes no account of other commonalities.) So possibly I might glean some alternate thoughts on structure from this. But this group does exhibit a desire for some managerial control; they’re just choosy about whom they allow in that role. So I’m not sure this entirely fits the bill. I’m not trying to rewrite their entire internal structure, just trying to figure out what they might be seeking by avoiding an ordered, open approach to their internal communications and coordination.

(I did find it particularly funny when I read the line: “Despite the shared motives of individuals devoted to direct, inclusive participation, their intentions for social change often dissolved into an ‘endless meeting’ in which they struggled to make decisions.” That is 100% what happens, every single damn time! Everybody’s frustrated when no decisions are made and there’s so much time spent, but everybody’s frustrated when somebody else makes a decision or says “we’re out of time to spend on this one topic.”)

Team Social Network Structure and Resilience was a shorter read, and was a fascinating simulation of team performance using either random or scale-free-like patterns of interaction. The analysis confirms what I would expect, namely that a higher degree of interaction among everybody on a team leads to a greater degree of resilience and higher performance. The even distribution of a random pattern provides this. In real terms, it never hurts to hear ideas from people outside your department as well as inside of it. If you only listen to people in your specific department, there is a much higher risk of groupthink, tunnel vision, and similar social failure modes. Plus, as a volunteer organization, it’s really quite difficult to get up any sort of excitement and motivation if nobody knows what you’re doing, and you don’t know (and can’t find out) what anybody else is doing.

The alternative that was examined, a scale-free-like pattern, is somewhat similar to the existing setup, but not completely. There are indeed only a few hubs–people who are in multiple subgroups–but I expect there’s more interaction within the subgroups among those members present. This seems like it would be closer to what the paper identifies as a block-diagonal pattern, which is more modular. But for my purposes, I think it’s reasonable to say the open-access (randomized) pattern seems to be a better option to shoot for.
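To get a feel for why hub-heavy structures can be fragile, here’s a small Python experiment. Note that this is not the team-performance model from Massari et al.; it swaps in a standard network-robustness illustration: build a random graph and a preferential-attachment (scale-free-like) graph with the same number of edges, remove the most-connected 10% of nodes, and measure how large the biggest still-connected group is. The graph size, attachment count, and seed are arbitrary assumptions.

```python
import random
from collections import deque


def random_graph(n, m, rng):
    """Erdős–Rényi-style G(n, m): m distinct edges chosen uniformly at random."""
    adj = {v: set() for v in range(n)}
    edges = 0
    while edges < m:
        a, b = rng.randrange(n), rng.randrange(n)
        if a != b and b not in adj[a]:
            adj[a].add(b)
            adj[b].add(a)
            edges += 1
    return adj


def preferential_graph(n, k, rng):
    """Barabási–Albert-style graph: each new node links to k distinct existing
    nodes, chosen with probability proportional to their current degree."""
    adj = {v: set() for v in range(n)}
    pool = []  # each node appears once per edge end, so picks favour hubs
    for i in range(k + 1):  # small fully connected core of k + 1 nodes
        for j in range(i):
            adj[i].add(j)
            adj[j].add(i)
            pool += [i, j]
    for new in range(k + 1, n):
        chosen = set()
        while len(chosen) < k:
            chosen.add(rng.choice(pool))
        for t in chosen:
            adj[new].add(t)
            adj[t].add(new)
            pool += [new, t]
    return adj


def largest_component(adj, removed):
    """Size of the biggest connected group once `removed` nodes are gone (BFS)."""
    seen, best = set(removed), 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        size, queue = 0, deque([start])
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, size)
    return best


def after_hub_removal(adj, fraction):
    """Remove the highest-degree `fraction` of nodes, then measure what's left."""
    hubs = sorted(adj, key=lambda v: len(adj[v]), reverse=True)
    return largest_component(adj, set(hubs[: int(len(adj) * fraction)]))


if __name__ == "__main__":
    rng = random.Random(42)
    sf = preferential_graph(200, 2, rng)
    m = sum(len(s) for s in sf.values()) // 2
    rnd = random_graph(200, m, rng)  # same edge count, degrees cluster near the mean
    remaining = 200 - int(200 * 0.1)
    for name, g in (("random", rnd), ("scale-free-like", sf)):
        print(name, after_hub_removal(g, 0.1), "of", remaining, "remaining nodes")
```

In runs like this the preferential-attachment graph usually splinters much more than the random one once its hubs are gone, though the exact numbers vary with the seed. That loosely mirrors the worry about the current setup: if the few people bridging subgroups drop out, the subgroups lose touch with each other.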

So… how to do it. To some extent, I think the biggest problem so far has been that, absent a managerial decision to push towards Discord, there’s really no benefit for anybody to be in Discord. There are no conversations happening there, and you have to be in Telegram in order to see anything or get anything done. But what if those two were bridged, such that people in Discord could be heard in Telegram, and people in Telegram could be heard in Discord? This is what I think I want to experiment with, at least right now.
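The core of the bridging idea can be sketched in a few lines. This is not TediCross’s code or API; `Chat`, `Message`, and `Bridge` are hypothetical in-memory stand-ins for a Telegram group and a Discord channel. The main design point is that the bridge reposts each message on the other side with the original author’s name attached, and ignores its own reposts so messages don’t bounce back and forth forever.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Message:
    author: str
    text: str
    via_bridge: bool = False  # True if the bridge itself posted this copy


@dataclass
class Chat:
    """Hypothetical stand-in for a Telegram group or a Discord channel."""
    name: str
    log: List[Message] = field(default_factory=list)
    listeners: List[Callable[["Chat", Message], None]] = field(default_factory=list)

    def post(self, msg: Message) -> None:
        self.log.append(msg)
        for listener in self.listeners:
            listener(self, msg)


class Bridge:
    """Relays every human-posted message in one chat to the other chat."""

    def __init__(self, a: Chat, b: Chat) -> None:
        self.peer = {a.name: b, b.name: a}
        a.listeners.append(self.relay)
        b.listeners.append(self.relay)

    def relay(self, source: Chat, msg: Message) -> None:
        if msg.via_bridge:
            return  # never echo our own copies back, or they would loop forever
        target = self.peer[source.name]
        target.post(Message(f"{msg.author} ({source.name})", msg.text, via_bridge=True))


telegram, discord = Chat("Telegram"), Chat("Discord")
Bridge(telegram, discord)
telegram.post(Message("Sam", "Who has the tent keys?"))
discord.post(Message("Alex", "I do!"))
for chat in (telegram, discord):
    print(chat.name, [(m.author, m.text) for m in chat.log])
```

Both sides end up with the full conversation, which is the whole point: nobody has to be in Telegram just to see what’s going on.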

References:

Massa, F. G., & O’Mahony, S. (2021). Order from chaos: How networked activists self-organize by creating a participation architecture. Administrative Science Quarterly, 66(4), 1037-1083. https://doi.org/10.1177/00018392211008880

Massari, G. F., Giannoccaro, I., & Carbone, G. (2023). Team social network structure and resilience: A complex system approach. IEEE Transactions on Engineering Management, 70(1), 209-219. https://doi.org/10.1109/TEM.2021.3058237

-

Week 9 Reflections

I like teaching people who want to learn. Functionally, it’s just talking, but I like to put the material in a context and framework that (ideally) helps it make sense. I also like to make sure people understand the “why” and not just the “is.”

With that said, I think I started by approaching this incorrectly. Mm… perhaps not “incorrectly,” so much as incompletely. I was focused less on making sure the topic had references and additional information, and more on explaining something I had already been digging into. But adding the references 100% made it stronger and more robust. And as a definite bonus, I got to read up on Stick, and now I’m looking forward to seeing more potential social applications implementing that (or something like it).

Normally, I shoot videos pretty off-the-cuff. I’ve tried writing out a “script” before, and it wound up feeling incredibly artificial and a little forced. (Plus, there were technical issues with pacing.) But in this case, I think my second attempt at the explanation (when I wrote it out underneath the video in the post) was stronger and more cohesive. It also gave me the chance to re-think and re-order some of my thoughts, or to go “You know, this doesn’t really work as a cohesive part of the entire discussion, how can I rephrase and refocus it?” If I were going to do this again, I might consider writing out the explanation first–not so much as a “script” of any sort, but just for the exercise of collecting and sequencing my thoughts appropriately. Then I could still shoot the video part spontaneously, but with a more refined idea of how to approach what I want to share.

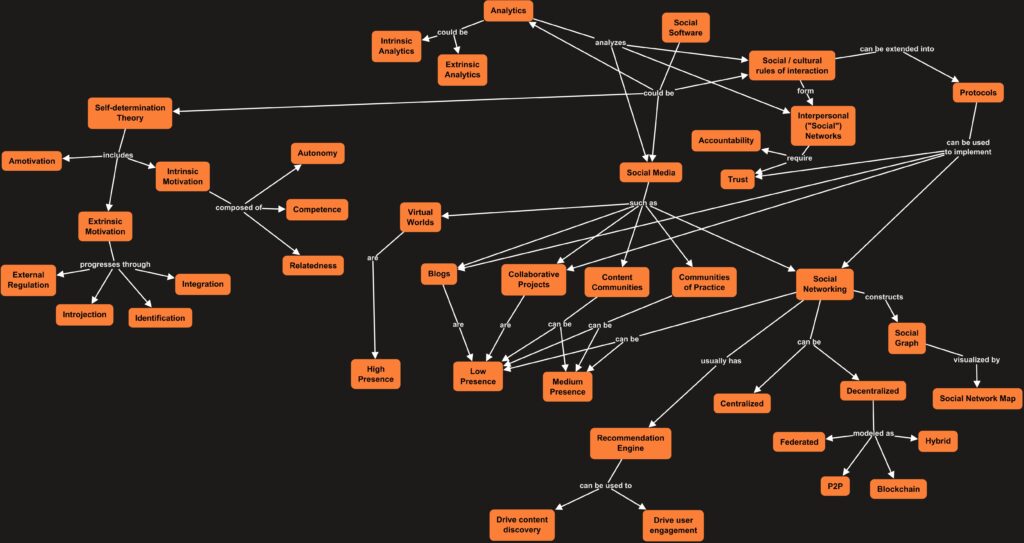

The concept map was a bit of a challenge. From where I sit right now, I can certainly identify some concepts, but not as many as I would have thought. I feel like a lot of my exploration during this course has been less about learning entirely new concepts and more about exploring existing ones, plus a bit of the usual (for me) gluing together of thoughts and insights across various areas. (And honestly, I think the thing I learned that excites me the most is the section on self-determination theory! That little section alone is going to be something I’m digging into for years!)

-

Week 9: Concept Map

So, we needed to put together a concept map for what we’ve been exploring in this course, at least as I have experienced it! I have no doubt that I’ve drawn in items that others don’t feel figured heavily, and conversely I’ve probably omitted things people may have thought were especially key.

Still, for all the inherent limitations, here’s what I’ve produced.

-

Week 9: DIY Unit

Federated, Decentralized, and E2EE Networks: Similarities, Differences, and Trade-offs

(TL;DW)

If you think about the history of computing, there’s a general trend that appears:

When a new advancement is made, it tends to be very large, very expensive, and usually only available to those who can afford the extravagance (or who have, or convince themselves they have, a great need). But as long as you have the money, space, or whatever resource is required, there’s no real barrier to getting one–there are so few instances of the new tech out there that you can’t really even hope to connect them for any sort of network benefit.

As time goes on and the new technology becomes more affordable, it typically enters the realm of “too expensive for the home, but businesses might have one.” In this phase, there’s a powerful incentive for businesses to create network effects, often by advancing their own patented or unique revision of the technology, in an effort to first convince people to use it, then make it difficult or costly for them to leave it, then use that increasingly captive audience to convince others to join their particular walled garden.

But as the technology continues to advance and becomes faster, smaller, cheaper… there’s more capability for people to begin making use of it at home. And naturally, they want to use it in the way that suits them–which is not always the way the businesses who created the walled gardens want them to use it. Over time, the growing ubiquity of the new technology can result in people overcoming network effects just because of the sheer number of people working against those effects in all their myriad ways.

Now, let’s think about social computing in this fashion. The earliest stages of social computing were very much about protocols, but they were somewhat unwieldy to employ and required a lot of resources to use, tied as they were to large computing systems and communication infrastructure that was rather resource-intensive.

As time passed, these large systems became easier to set up and use, but… communications infrastructure was still lacking, and running large websites at home was frequently infeasible. This led to a centralization effect, where a site managed by a business could benefit from having those network effects–more users could mean more revenue, which allowed them to expand the site. It had the potential to be a positive feedback loop, if the effects of greed and profit-seeking were kept at bay.

But over time, the technology available to us has continued to improve. We have more powerful computers, and always-on broadband connections are much more commonplace. We could very well be approaching the time when people can pull their “social networks” (in the sense of a service or a site) back out to the edges of the network, rather than keeping them held in a centralized location.

And this leads us to decentralized networks!

There’s certainly more than one style of decentralized network, taken as an umbrella category. If you want to replicate the look and feel of something such as Twitter, perhaps you might start by creating your own Twitter-like website that you can run on your own equipment. And hey, maybe somebody else runs their own copy of the software, too. A protocol can connect the two of them so you can see each other’s activity–this is a federated system.

I would categorize a federated system as a midpoint along the continuum of decentralization. It’s still a somewhat-centralized service, after all. The admin may federate with other servers… or they might not. You still have to find and choose what server you want to sign up under, and if you go create a new account on another server, well… that’s a different account on a different server, and you might need to rebuild your post history and your social graph from there.

There are other styles of decentralized network–P2P and blockchain are two others mentioned by Jeong et al. (2025). But if you continue pulling the social network out to the edge, the logical endpoint is a system where everybody has their own “site.”

The example I would use here is Bluesky and the AT Protocol, which you may have read about in Unit 8! Under the AT Protocol, every user has a globally unique decentralized identifier (DID). The human-readable handle can be changed, but the DID stays the same no matter where you go. And you can go wherever you want–the DID can be updated to point to a personal data store, or PDS, hosted wherever you choose. It can be on Bluesky’s servers, or you can run it yourself. That PDS contains your posts, your social graph, and all the data that is “yours.” If you want to go somewhere else, you simply move your data to a new PDS, with no need to rebuild from scratch.
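A toy model of that identity/location split, assuming an in-memory `Directory` in place of real DID resolution. This is not the actual atproto API, and `did:example:abc123` and the example domains are placeholders. The point is just that handle changes and PDS moves both update the record the DID points to, while the DID itself never changes.

```python
from dataclasses import dataclass


@dataclass
class DidDocument:
    did: str      # permanent identifier: never changes
    handle: str   # human-readable name: can be changed
    pds_url: str  # where the user's data currently lives


class Directory:
    """Toy stand-in for DID resolution (not the real atproto resolver)."""

    def __init__(self) -> None:
        self.docs: dict[str, DidDocument] = {}

    def register(self, did: str, handle: str, pds_url: str) -> None:
        self.docs[did] = DidDocument(did, handle, pds_url)

    def change_handle(self, did: str, new_handle: str) -> None:
        self.docs[did].handle = new_handle

    def migrate(self, did: str, new_pds_url: str) -> None:
        # Moving to a new PDS only changes where the DID points.
        self.docs[did].pds_url = new_pds_url

    def resolve_handle(self, handle: str) -> DidDocument:
        for doc in self.docs.values():
            if doc.handle == handle:
                return doc
        raise KeyError(handle)


d = Directory()
d.register("did:example:abc123", "alice.example.com", "https://pds-one.example.com")
d.change_handle("did:example:abc123", "alice.example.org")
d.migrate("did:example:abc123", "https://pds.example.org")
doc = d.resolve_handle("alice.example.org")
print(doc.did, doc.pds_url)  # did:example:abc123 https://pds.example.org
```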

By contrast, end-to-end-encrypted (E2EE) networks are a different breed entirely. They certainly can be decentralized… but that introduces new challenges.

In an E2EE network, everything is encrypted at the endpoints before being transmitted. No intermediate point along the path can read the content of the traffic. That’s already a benefit. Of course, metadata and traffic flow analysis can still be used to infer other information about the communications, which is one reason some E2EE systems are also designed in a completely decentralized fashion.

But one of the biggest challenges of E2EE is key management. That is, if the encryption keys are only managed at the endpoints… how do new endpoints exchange those keys? If I only accept encrypted traffic, how do you send me a message to ask for my encryption keys? I would have to post my keys somewhere, and before you could try to talk to me, you would have to find wherever I put my keys.

In the realm of OpenPGP, keyservers are a common answer to this quandary. But a keyserver is a point of centralization–the bigger a keyserver becomes, the more useful it is for people to use it, and the more the network will de facto depend on it. So already, we see that centralization still plays a very important role in making E2EE networks convenient for users.
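A minimal sketch of the keyserver pattern, assuming raw bytes as placeholder keys and a SHA-256 digest truncated to 16 hex digits as the "fingerprint" (real OpenPGP fingerprints are computed differently). It shows why the keyserver is so convenient, and also why people still compare fingerprints out-of-band: the central lookup hands you a key, not necessarily the right key. All names are hypothetical.

```python
import hashlib


def fingerprint(public_key: bytes) -> str:
    """Short digest that people can compare out-of-band (in person, by phone...)."""
    return hashlib.sha256(public_key).hexdigest()[:16]


class Keyserver:
    """Toy keyserver: one central handle -> public key table."""

    def __init__(self) -> None:
        self.keys: dict[str, bytes] = {}

    def publish(self, handle: str, public_key: bytes) -> None:
        self.keys[handle] = public_key  # note: anyone can overwrite an entry in this toy

    def lookup(self, handle: str) -> bytes:
        return self.keys[handle]


def fetch_and_verify(server: Keyserver, handle: str, expected_fp: str) -> bytes:
    """Use the keyserver for convenience, but trust only a fingerprint checked separately."""
    key = server.lookup(handle)
    if fingerprint(key) != expected_fp:
        raise ValueError(f"fingerprint mismatch for {handle}")
    return key


ks = Keyserver()
ks.publish("alice", b"alice-public-key-bytes")     # placeholder key material
alice_fp = fingerprint(b"alice-public-key-bytes")  # obtained from Alice directly
print(fetch_and_verify(ks, "alice", alice_fp))
```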

Signal is an example of an E2EE network that still maintains a centralized service to facilitate key exchange and introduction. But because keys are essentially tied to the endpoint devices, the loss or replacement of a device can mean the entire messaging history is lost. That would not be acceptable in a social network. More recently, Stick, a newer E2EE protocol based on the Signal protocol, has been developed to try to extend the benefits of E2EE to social networking applications as well (Basem et al., 2023).

Decentralized and E2EE social computing is an area that has held my fascination for a long time, and probably will in the future as well! Hopefully this was an interesting overview of this area for you, too!

References:

Basem, O., Ullah, A., & Hassen, H. R. (2023). Stick: An end-to-end encryption protocol tailored for social network platforms. IEEE Transactions on Dependable and Secure Computing, 20(2), 1258-1269. https://doi.org/10.1109/TDSC.2022.3152256

Jeong, U., Ng, L. H. X., Carley, K. M., & Liu, H. (2025, March 31). Navigating decentralized online social networks: An overview of technical and societal challenges in architectural choices. arXiv preprint. https://doi.org/10.48550/arXiv.2504.00071

Masnick, M. (2019, August 21). Protocols, not platforms: A technological approach to free speech, 19-05. Knight First Amendment Institute. https://perma.cc/MBR2-BDNE

-

Week 8 Reflections

The reading this week was less structured than usual, if only by virtue of the fact that there are many possible protocols to read up on, and not all of the documentation for them is… especially readable, let’s say.

Still, it was good. It was good to refresh myself on OpenID and Signal. ActivityPub and AT Protocol are newer, and I hadn’t read up on them at all. I was slightly familiar with Mastodon and Bluesky, but I honestly had a lot of misconceptions about the limitations of Bluesky–AT Protocol is much, much more decentralized and flexible than I had understood it to be. I actually have a lot more respect for AT now. (And somewhat less for ActivityPub, if I’m honest? Why do all actors have to have a record of what they’ve liked? It’s a design choice, I grant you, and clearly the protocol is working, but… well, I guess I just disagree with the wisdom of that design decision.)

Designing my own protocol, on the other hand, was a lot of fun! I feel like I got too deep into the weeds, working out lots of little details and losing sight of some of the big-picture stuff… I tried to avoid that by writing out the top-level goals first, but I still feel like my mind wandered. It’s very much a first draft, not a finished product–there would be a lot of refinement needed, complete with streamlining and reorganizing the “document” (such as it is) to make sure everything was logically structured. Still fun, though. It was an opportunity to let the geek out a bit.

I do feel like the protocol I came up with is very strongly based on AT Protocol. That’s a sign of how impressed I was with it, I suppose. But I also wanted to get a little bit of the flavor of the old LiveJournal access controls in there, and Signal’s use of multicast encryption for group chats was also a solid idea. I’m not as strongly versed in the details of multicast encryption as I am in normal public/private key encryption, but if it’s in Signal, it’s been vetted and is cryptographically sound. May as well use it where it will be of benefit! (I’m not entirely convinced I’m using it correctly in my spec, but that would be something to be re-addressed in later revisions.)

I might actually dig more into AT Protocol and join that network. The fact that I can use my own domain and my own PDS is a strong plus. Even without access controls, there’s some limited utility in having a short-form broadcast point available that isn’t ExTwitter. (Which I was never happy with and only signed up on because, if I didn’t, somebody else was going to create the account for me.) Mastodon was interesting to research, but I don’t think it’s the right network for me.

Never thought I’d come out of this course all fired up on the technical details underpinning Bluesky, but there you go: we never know where the learning journey will take us. That’s why we have to keep an open mind along the way!

-

Week 8: Technical Standards, APIs, and Protocols

I’m going to consider these protocols:

- OpenID (https://en.wikipedia.org/wiki/OpenID)

- ActivityPub (https://www.w3.org/TR/activitypub/, https://en.wikipedia.org/wiki/ActivityPub, and https://www.w3.org/wiki/ActivityPub/Primer/Authentication_Authorization)

- AT Protocol (https://en.wikipedia.org/wiki/AT_Protocol and https://atproto.com/guides/faq)

- Signal Protocol (https://en.wikipedia.org/wiki/Signal_Protocol)

I was actually a user on LiveJournal back when Brad Fitzpatrick launched OpenID. And for a while, it was quite nice to be able to use a single identity provider for everything. The promise of convenience and being able to have one canonical digital “you” that everything could be linked to was quite alluring.

Of course, as Facebook and Google grew ever larger and encompassed more and more of the Web’s active social services… well. The attraction died. And I realized something important–perhaps it was more secure to simply create many sharded identities at the various sites. Because I didn’t want Facebook, or Google, or indeed any identity provider, to be the sole key to my digital identity. What happened if that provider went away–bankrupted, or sold off, or just blown offline by some large-scale attack? And as long as every site used a different set of credentials, compromise at one could not compromise all. So using individual logins was a win for both security and resiliency.

I like that the Wikipedia article for OpenID explicitly draws some comparisons to OAuth, because I think OAuth has also replaced OpenID to a large extent. Both involve authenticating through an external identity provider (IdP). However, behind the scenes, OpenID has both sites talking to each other and verifying the identity, while OAuth essentially hands a token to the user, who hands it to the second site, which uses the token to query the first site through whatever API it offers.

In some ways, OAuth has more applicable contexts, because it’s designed to allow users to delegate access even to non-interactive devices. However, OAuth has a major issue if it’s not set up correctly with HTTPS: a token is not typically single-use. If either the end device or the connection is compromised, the token can be stolen and reused to gain access, and an attacker may even persist that access by using the stolen token to obtain a new one, even when the original application is no longer active. The same issue would, in fairness, arise if OpenID were used without HTTPS, but its use of a nonce and live authentication would at least make a later replay somewhat more complicated.
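A greatly simplified sketch of the difference I mean, ignoring expiry, signatures, and the real message formats of both specs. The class names are hypothetical. A bearer token is only checked for validity, so a stolen copy works as often as it’s presented; a one-time nonce is remembered once used, so a captured assertion replayed later gets refused.

```python
import secrets


class BearerTokenAPI:
    """Toy resource server: whoever presents a valid token gets in, every time."""

    def __init__(self) -> None:
        self.valid_tokens: set[str] = set()

    def issue(self) -> str:
        token = secrets.token_urlsafe(16)
        self.valid_tokens.add(token)
        return token

    def access(self, token: str) -> bool:
        return token in self.valid_tokens  # no memory of earlier uses


class NonceCheckingRP:
    """Toy relying party: each assertion's nonce is accepted exactly once."""

    def __init__(self) -> None:
        self.seen_nonces: set[str] = set()

    def accept(self, nonce: str) -> bool:
        if nonce in self.seen_nonces:
            return False  # replayed assertion
        self.seen_nonces.add(nonce)
        return True


api = BearerTokenAPI()
stolen = api.issue()
print(api.access(stolen), api.access(stolen))  # True True: a captured token keeps working

rp = NonceCheckingRP()
print(rp.accept("nonce-1"), rp.accept("nonce-1"))  # True False: the replay is refused
```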

ActivityPub is clearly designed off a general social media platform paradigm. That “followers,” “following,” and “liked” are required attributes of any “actor” in the protocol says a lot, in my mind. There are other optional attributes, but those are always required. So the assumption that “likes” are a thing is baked right in there, as are the assumptions that people using this protocol want to “follow” each other and allow others to follow them.

The discussion around how servers essentially take messages from actors’ outboxes and post them into other actors’ inboxes is reminiscent of SMTP, of course. But then there’s the line that says that “Attempts to deliver to an inbox on a non-federated server SHOULD result in a 405 Method Not Allowed response.” So… the ability to deliver a message to an inbox depends on the two servers being federated. But I don’t see anything in this protocol description as to how this federation occurs. Worse, I see a note that while ActivityPub uses authentication in server-to-server federation, there’s no standard for this??? Only a list of best practices? Yo, if you’re going to say that non-federated deliveries should fail, you should definitely have a spec for how federation should occur! Without a spec, people are going to bodge that stuff, and it’s not always going to be pretty!

In fact, I currently think that’s possibly the biggest weakness in ActivityPub (that I see), at least as far as interoperability goes. Theoretically, compatible servers should be able to use the protocol to send between implementations–and the protocol already notes that clients may or may not be able to edit / interpret the original “source” used to create the rendered “content” in the messages. But without a standard for federation… aren’t we basically left in a situation where everybody does what seems good to them, with maybe a nod to “best practices” in concept, but with everybody putting their own specific twist on it along the way? That seems like a recipe for a very fragmented ecosystem, where various implementations can only reliably talk to themselves, using whatever method is deemed acceptable for setting up federation.
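Here’s a toy model of the outbox-to-inbox flow. It treats a “non-federated server” simply as a server with server-to-server delivery switched off, which is one reading of the quoted line. The genuinely hard part (how servers authenticate and decide to trust each other) is exactly what’s left to best practices, so it’s absent here too. The `Server` class and `deliver` function are hypothetical, and the 202 status for an accepted delivery is an assumption; only the 405 comes from the spec text quoted above.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    federated: bool  # does this server accept server-to-server deliveries at all?
    inboxes: dict[str, list[dict]] = field(default_factory=dict)

    def receive(self, actor: str, activity: dict) -> int:
        # Per the spec's SHOULD, a non-federated server answers 405 Method Not Allowed.
        if not self.federated:
            return 405
        self.inboxes.setdefault(actor, []).append(activity)
        return 202  # assumption: some 2xx "accepted" code


def deliver(activity: dict, recipients: list[tuple[Server, str]]) -> dict[str, int]:
    """Copy one outbox activity into each recipient's inbox, SMTP-style."""
    return {f"{actor}@{srv.name}": srv.receive(actor, activity) for srv, actor in recipients}


note = {"type": "Create", "actor": "alice", "object": {"type": "Note", "content": "hi"}}
a = Server("a.example", federated=True)
b = Server("b.example", federated=False)
result = deliver(note, [(a, "bob"), (b, "carol")])
print(result)  # {'bob@a.example': 202, 'carol@b.example': 405}
```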

Now let’s talk about AT Protocol! The more I read, the more I like it… the entire design of the protocol revolves around a different concept entirely. Rather than focusing on a user-centric model of an inbox and an outbox, AT Protocol conceives of the entire social network from the perspective of all the various services needed to power the network in a decentralized fashion. Handles are linked to domain names and keys published via domain names or web servers. All the data in the network is stored within personal data servers (PDSs) for the various accounts. Updates to the network are posted to the various PDSs. These updates are gathered and collated by relays. App views can then display the collected content of the network, with authoritative data always available through the PDS which originated any specific post. I touched on this back in Week 5 (https://comp650.nextdoornetadmin.ca/2025/05/28/week-5-analyzing-social-systems-part-2/), but the app views can also use different algorithms to alter the view of the network at the user’s request.

This structure is designed to allow any part to be swapped out or replaced at any point. Users can change their handles to use their own domain names, and pull their entire store of data into another PDS at will. Importantly, the PDS contains all the user’s data, including posts, friends, and blocks. The user’s social graph is always ultimately under their control, and cannot be shut down because the user can always retrieve their data (which they control with their own cryptographic keys) and move it to another PDS. Similarly, if a particular relay decides to censor the network by refusing to crawl particular PDSs, anybody can set up another relay to collate the network’s data. Applications offering an app view of the network can be swapped out, and so can the algorithms responsible for ordering and displaying the data.
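A toy sketch of why a censoring relay doesn’t get the final say, assuming hypothetical `PDS` and `Relay` classes (the real protocol streams signed repository updates, which this ignores entirely). Because the data lives in the PDSs, a second relay that skips nobody sees everything the first relay dropped.

```python
from dataclasses import dataclass, field


@dataclass
class PDS:
    host: str
    records: dict[str, list[str]] = field(default_factory=dict)  # DID -> that user's posts

    def post(self, did: str, text: str) -> None:
        self.records.setdefault(did, []).append(text)


@dataclass
class Relay:
    skip_hosts: set[str] = field(default_factory=set)  # PDS hosts this relay refuses to crawl

    def crawl(self, pdss: list[PDS]) -> list[tuple[str, str]]:
        """Collate (DID, post) pairs from every PDS this relay is willing to crawl."""
        collated = []
        for pds in pdss:
            if pds.host in self.skip_hosts:
                continue
            for did, posts in pds.records.items():
                collated.extend((did, text) for text in posts)
        return collated


home = PDS("pds.example.org")
home.post("did:example:alice", "hello")
big = PDS("big-pds.example.com")
big.post("did:example:bob", "hi")

censoring = Relay(skip_hosts={"pds.example.org"})
open_relay = Relay()
print(censoring.crawl([home, big]))   # [('did:example:bob', 'hi')]
print(open_relay.crawl([home, big]))  # [('did:example:alice', 'hello'), ('did:example:bob', 'hi')]
```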

At a technical level, this strikes me as a censorship-resistant protocol, but rather than attempting to counter a network-level adversary that controls and filters network access, the goal here is to counter corporate or organization-level control of the server infrastructure that drives the network. (This is complementary to something like Tor, and you could even theoretically extend this to running PDSs at .onion hidden services.) This also means that the network cannot easily be enshittified, because by design there can be no lock-in–all components can be swapped out if they no longer serve the user’s interests.

What does stick out to me right now is that by design, all data in the PDS is public. There are no access controls. Users can choose to block individuals, but that only prevents them from seeing the blocked user–their own content is still visible to the blocked user. I suspect this is, to a large degree, a function of making the network so resistant to centralized control. In that setup, no user can unilaterally impose their will on another, and the same is true for any organization that would try to moderate content. There are publicly-sourced block lists, and moderation tools that can add some metadata to posts, but it’s up to individual app views or relays to respect those configuration choices; it can’t be completely enforced within the social network.

I’ve been using Signal for quite some time, and I have a massive amount of respect for the Signal developers. It is important to note that the Signal protocol itself is distinct from Signal the application. The Signal protocol can be (and has been) implemented within other applications (such as WhatsApp).

The Signal protocol is also somewhat censorship-resistant, but it was designed more for security than for other purposes. To this end, the protocol ensures that messages sent between participants are encrypted end-to-end, so that the service operator cannot see what is being exchanged. Of course, this still leaves visible the metadata of who is doing the communicating. A further development was “sealed sender”, which hides the sender’s identity from the server; the server only needs to know the recipient. The use of the Axolotl ratcheting algorithm (later renamed the Double Ratchet Algorithm) also means that even if keys are compromised, the damage is limited: as new keys are continually derived and exchanged between the endpoints, the compromise heals.
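For the ratchet, here’s a minimal sketch of only the symmetric-key chain half of the Double Ratchet. It uses HMAC-SHA256 with separate one-byte constants to derive each message key and the next chain key (the approach the Double Ratchet specification recommends for its chain KDF). The Diffie-Hellman ratchet, which is what actually heals a compromise by mixing in fresh key material, is left out. This only shows that both ends can derive a fresh key per message from a shared starting point, and that because the derivation is one-way, old keys can’t be recomputed from newer chain keys. The shared secret is a placeholder and the function names are hypothetical.

```python
import hashlib
import hmac


def kdf_chain_step(chain_key: bytes) -> tuple[bytes, bytes]:
    """One symmetric-ratchet step: returns (message_key, next_chain_key)."""
    # Separate constant inputs keep the two outputs independent.
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    return message_key, next_chain_key


def message_keys(start: bytes, count: int) -> list[bytes]:
    """Derive `count` per-message keys, discarding each chain key after use."""
    keys, chain_key = [], start
    for _ in range(count):
        mk, chain_key = kdf_chain_step(chain_key)
        keys.append(mk)
    return keys


shared = hashlib.sha256(b"placeholder shared secret").digest()  # stand-in for a real key agreement
alice_keys = message_keys(shared, 3)
bob_keys = message_keys(shared, 3)
print(alice_keys == bob_keys)  # True: both ends derive the same keys
print(len(set(alice_keys)))    # 3: each message gets its own key
```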

Importantly, while the protocol is verifiably secure, the applications and end devices are “out of scope” for those assurances. It’s entirely possible for a secure protocol to be used in a very insecure application. It’s also very possible for it to be used in a messaging application run by an untrustworthy company that makes its money by tracking its users and their communication patterns (*cough* Meta *cough*). As is usually the case with an E2EE protocol, the centralization is used for necessary purposes including exchanging public keys and relaying messages when either end device is temporarily offline. No facility is provided for a user to move to a different application using the protocol while bringing their entire social graph with them; Signal Protocol is only concerned with securing messages between endpoints.

Now… how would I design a distributed / federated social platform? Well… let’s start with establishing design principles. In no particular order,

- User data should be controllable by the user. Similar to AT Protocol, this should include their unique preferences, friend list, and block list.

- Users can establish a list of preferred or mandatory data stores. This can be a data store under their personal control, or it might be a store administered by a trusted contact. By default, the network will attempt to store “posted” data to three separate stores for redundancy. Reducing the number of data stores below this number should trigger a warning to the user.

- Data stores have a configurable storage limit per user. Users cannot post into the network unless they have sufficient space remaining.

- Every user has a unique handle and public/private keypair. This is used to sign outbound posts into the network, asserting the user’s ownership. Public posts and media are only signed and otherwise cleartext. Posts may also be restricted to specific viewers by encrypting those posts and media to the receivers’ public keys.

- When data is transmitted into storage, the client also encrypts the data to the store’s public key. This is necessary to ensure that data store operators can scan for and remove illegal materials. All material is still signed with the owner’s keys, so it can be verified that a data store has not altered or tampered with the stored material–it should be either entirely present, or not at all.

- The first post into the network should be the user’s profile, including their public key and list of data stores.

- Existing posts can be edited, including for key rotation, by providing proof of knowledge of the previous private key used to post them.

- When posting into the network, clients may also send a short message indicating a new post was submitted from the user (and bound for “public” or alternately a list of specified handles), along with a unique ID for that post, to a relay server. Relays gossip amongst themselves to share updates which they know about. Posts which are not sent to a relay are quasi-“private”, but are still available if directly retrieved (though their access controls remain intact via the list of recipients for whom they are encrypted).

- Relay servers serve as an index of user profiles, and sender/receiver/post ID triplets.
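A rough sketch of the posting rules above (three stores by default, a warning below that, a per-user quota, and an alert plus later retry for an unreachable store), assuming hypothetical `DataStore` and `publish` names and a content-hash post ID. Signing and encryption are deliberately left out to keep it short; in the actual design, `body` would be the signed (and possibly encrypted) post.

```python
import hashlib
from dataclasses import dataclass, field

DEFAULT_REPLICAS = 3  # the design's default redundancy target


@dataclass
class DataStore:
    name: str
    quota_bytes: int                                    # per-user storage limit
    online: bool = True
    used: dict[str, int] = field(default_factory=dict)  # handle -> bytes used
    posts: dict[str, bytes] = field(default_factory=dict)

    def has_room(self, handle: str, size: int) -> bool:
        return self.used.get(handle, 0) + size <= self.quota_bytes

    def save(self, handle: str, post_id: str, body: bytes) -> None:
        self.posts[post_id] = body
        self.used[handle] = self.used.get(handle, 0) + len(body)


def publish(handle: str, body: bytes, stores: list[DataStore]) -> tuple[str, list[str]]:
    """Write one post to every listed store; return its ID and any alerts."""
    post_id = hashlib.sha256(handle.encode() + body).hexdigest()  # hypothetical ID scheme
    # No posting without room: refuse if any reachable store is over quota.
    for store in stores:
        if store.online and not store.has_room(handle, len(body)):
            raise RuntimeError(f"not enough space on {store.name}")
    alerts = []
    if len(stores) < DEFAULT_REPLICAS:
        alerts.append(f"only {len(stores)} data store(s) configured")
    for store in stores:
        if store.online:
            store.save(handle, post_id, body)
        else:
            alerts.append(f"{store.name} unreachable; retry or ask another store to sync it")
    return post_id, alerts


stores = [DataStore("home", 1000), DataStore("friend", 1000, online=False)]
post_id, alerts = publish("alice", b"hello network", stores)
print(alerts)
```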

This is conceptually similar to the AT Protocol, except with additional access controls to control post visibility, as well as adding redundancy for the network’s content. This protects against failure or unavailability of a personal data store.

A PDS installation should be configurable to allow whitelisted content (for private / friend group storage) or public content. Servers may allocate storage to users based on defaults or based on configured amounts linked to the handle / keypair. This may even allow users to establish commercial relationships with storage providers if desired (but never because it’s required–users can always run their own PDS, and can choose to have fewer than three storage instances if desired, though that reduces reliability).

Because posts and other material aren’t directly accessed by the relay servers, they should be relatively fast at serving the indexes described. Clients can perform a lookup of a user, a user’s posts, or posts destined for them, caching the data retrieved. Clients may display inbound posts by locating the PDS for the sending user and then retrieving the post content from any of the listed PDSs that can provide it. Clients may also be explicitly given a PDS link to retrieve, if a post is not announced to a relay. Clients posting content will attempt to store their content directly on all authoritative PDSs; if one is unavailable, an alert should be raised and the client should retry the storage operation later or request a functional PDS to perform a sync to the unavailable PDS later.
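A small sketch of the relay index and client-side fetch, with hypothetical names throughout. The relay holds only profiles and (sender, receiver, post ID) announcements; the client asks it what’s addressed to it (or to “public”), then tries the sender’s listed stores in order until one returns the post. Encryption is again omitted, so in this toy anyone could read a post they fetch.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RelayIndex:
    profiles: dict[str, list[str]] = field(default_factory=dict)             # handle -> its PDS names
    announcements: list[tuple[str, str, str]] = field(default_factory=list)  # (sender, receiver, post ID)

    def announce(self, sender: str, receiver: str, post_id: str) -> None:
        self.announcements.append((sender, receiver, post_id))

    def inbound_for(self, handle: str) -> list[tuple[str, str]]:
        """(sender, post ID) pairs addressed to this handle or to "public"."""
        return [(s, p) for s, r, p in self.announcements if r in (handle, "public")]


def fetch(post_id: str, sender: str, relay: RelayIndex,
          reachable: dict[str, dict[str, bytes]]) -> Optional[bytes]:
    """Try each of the sender's listed stores in turn; any replica will do."""
    for pds in relay.profiles.get(sender, []):
        body = reachable.get(pds, {}).get(post_id)
        if body is not None:
            return body
    return None


relay = RelayIndex(profiles={"alice": ["pds-a", "pds-b"]})
relay.announce("alice", "public", "post-1")
relay.announce("alice", "carol", "post-2")
reachable = {"pds-b": {"post-1": b"hello everyone", "post-2": b"hi carol"}}  # pds-a is down
for sender, pid in relay.inbound_for("bob"):
    print(sender, fetch(pid, sender, relay, reachable))  # alice b'hello everyone'
```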

Locating a user’s profile will also grant access to the user’s public key, allowing posts to be directed toward that user (provided the receiving user has not instructed their client to block display of posts from the sending user). If the user has chosen to make this knowledge publicly available, their list of friends may also be posted, allowing others to traverse the network and find additional connections.

Key rotation is explicitly encouraged, as is data transfer. Servers should be prepared to locate all stored information by key and accept edits (including a new signature over the post) or export it to the client or a new PDS. Such commands or “system posts” are, like regular posts, signed with the handle’s known keypair to authenticate the command.

Relays not only gossip information about new posts, but (by default) about connections to other relays as well. This should allow a new relay to “bootstrap” with only a few connections to existing relays.
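Relay bootstrapping via gossip is basically a graph walk over each relay’s advertised peers. A minimal sketch, assuming a hypothetical `peers_of` map of what each relay gossips: starting from one seed, the new relay ends up knowing every relay reachable from it, while a relay with external connections disabled stays on its own.

```python
from collections import deque


def discover_relays(seed: str, peers_of: dict[str, set[str]]) -> set[str]:
    """Breadth-first walk of gossiped peer lists, starting from a single seed relay."""
    known = {seed}
    queue = deque([seed])
    while queue:
        relay = queue.popleft()
        for peer in peers_of.get(relay, set()):
            if peer not in known:
                known.add(peer)
                queue.append(peer)
    return known


peers_of = {
    "relay-a": {"relay-b"},
    "relay-b": {"relay-a", "relay-c"},
    "relay-c": {"relay-d"},
    "private-relay": set(),  # external connections disabled
}
print(sorted(discover_relays("relay-a", peers_of)))
# ['relay-a', 'relay-b', 'relay-c', 'relay-d']
```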

Groups may be created by a special “system post” which creates a new handle and keypair for multicast encryption. The creating user is registered as the “owner” of the group, which then creates a first post as the group’s “profile” as with a regular account, including a list of PDSs which the group will use. Group modifications, including to the list of owners and moderators, are approved by the group owner(s). Moderators are given “edit” access to the group’s posts. The group account is then instantiated on the PDSs as a clustered service, using appropriate primary / backup election methods to ensure that the group can respond to incoming input autonomously.

Group members may post to the group by sending a regular post destined only to the group; posts with a mix of group and regular recipients must be rejected. When the group account receives a post destined for it, the group account “wraps” the inbound post and rebroadcasts it using the group’s multicast encryption key. Moderators may delete the post if it is deemed inappropriate, which causes the group to blacklist that specific post ID (to prevent re-transmission). The posting user may also edit their message as normal, and the group should re-wrap and re-broadcast it as normal when the edit is detected. If the original user deletes their message, the group deletes its copy as well.
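A toy version of the group account’s receive and moderation rules, with hypothetical names and a plain string standing in for re-encryption under the group’s multicast key. Mixed-recipient posts are refused, a re-sent post ID is treated as an edit and re-wrapped, and a moderator deletion blacklists the ID so it can’t be pushed through again.

```python
from dataclasses import dataclass, field


@dataclass
class GroupAccount:
    handle: str
    blacklist: set[str] = field(default_factory=set)           # post IDs removed by moderators
    rebroadcast: dict[str, str] = field(default_factory=dict)  # post ID -> wrapped copy

    def receive(self, post_id: str, author: str, recipients: list[str], text: str) -> bool:
        """Wrap and re-broadcast a member's post; a repeated post ID is treated as an edit."""
        if recipients != [self.handle]:
            return False  # mixed group/regular recipients must be rejected
        if post_id in self.blacklist:
            return False  # a moderator already removed this post
        # Placeholder for re-encrypting under the group's multicast key.
        self.rebroadcast[post_id] = f"[{self.handle}] {author}: {text}"
        return True

    def moderator_delete(self, post_id: str) -> None:
        self.rebroadcast.pop(post_id, None)
        self.blacklist.add(post_id)

    def author_delete(self, post_id: str) -> None:
        # The author deleting their original means the group drops its copy too.
        self.rebroadcast.pop(post_id, None)


group = GroupAccount("knitting-club")
print(group.receive("p1", "alice", ["knitting-club", "bob"], "hi"))  # False
print(group.receive("p2", "alice", ["knitting-club"], "hello"))      # True
```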

Because the group’s key is established for multicast encryption, users who have joined the group can instruct their clients to include posts from the group account, and messages from that group will be decrypted using multicast encryption rather than the user’s personal keypair.

It is anticipated that very popular groups may require their own set of private PDSs specifically to handle group content, particularly as this must be “re-broadcasted” under the group’s own account. This will, however, take the load of running the “group” account off any public PDSs, as well as alleviating the storage burden and possibly increasing performance.

A completely self-contained network can be created by initiating a relay with external connections disabled. If a client posts to its own private PDS, and notifies only the disconnected relay, then a social network of one user is the result. A second relay can be added by permitting external connections only to a specified target, and the self-contained network can grow from there.

I explicitly envision this being able to run within Tor as a (set of) hidden service(s). Though I’m sure the devil is in the details… it’s kind of a rough outline, I know.

-

Week 7 Reflections

Not as much reading this week, largely because I had already read several of the suggested resources for this unit in previous weeks! Which probably says something about how I approach social technology, all in all. The phrase “eyes wide open” comes to mind.

Honestly, I’ve been navigating the issue of privacy for a long time, including the moral and ethical issues involved. I know that “privacy” can be a double-edged sword. I run a Tor relay node, and I’ve taken a stab at running exit nodes in the past. Some would ask “Why??? Isn’t that the dark web, where all the criminals and drug lords are?” Except, as with so many things, it’s more than that. The Tor network is also the largest (and one of the most durable) censorship-resistant networks on the planet. When users in China or Iran want to bypass the content restrictions placed upon their network access, Tor is one of the ways they can reach the outside world.

The tradeoff for allowing people more freedom is that more harms will result. On balance, I believe that allowing people to bypass content controls is the greater benefit, because the alternate path of allowing governments and corporations to dictate what we are “permitted” to see and learn would eventually extinguish all free thought. Further, controls affect the honest people first–it’s not criminals and ne’er-do-wells who are immediately curtailed. And for those who say they have nothing to hide: that’s good to hear; now may I please have your name, social insurance number, date of birth, and mother’s maiden name? The truth of the matter is, we all have some information that we fully expect to keep private, hidden from those who we judge have no need to know.

So in some ways, this unit was easier for me to navigate, if only because I’ve been actively working in and thinking about this material for at least 15 years. Even so, revisiting highly negative experiences is never easy.

Much of my thinking comes down to this: every ethical question ultimately rests on individual decisions. Whom do you trust? You may never know what their decision will be… but can you at least trust that they will think deeply about it, and that they will try to apply an informed moral framework while balancing the various goods and harms for those affected?

The most difficult thing for me is finding people whom I can trust to meet those conditions. I’m a big believer in the idea that those who demand your trust are not worthy of it. I can offer myself in service; I can’t demand to be trusted. Further, if I am to be worthy of trust, I cannot insist on being the only person holding the keys. There has to be more than one person who can intervene, because that’s just good system design: a single “trusted” person is a single point of failure, at higher risk of attack or subversion.

As an admin, I also believe strongly in a modified version of the phrase “Power corrupts; and absolute power corrupts absolutely.” My modification is that it’s not the possession of power that corrupts, but the use of power. If I hold all the keys but never permit myself to use them, then I am not corrupted. But every time that power must be used, no matter how good the use, it corrupts the soul a little bit. The taste of power employed is, for many people, extremely addictive. I am not immune to this. My goal in any sort of admin situation is to avoid having to use the tools of power, because if I ever start to view power as a right or an entitlement, then I am no longer fit to be an admin.

If more people held to such an approach, informed themselves about the ethical dilemmas involved, and committed to getting involved and sharing the load (in any community!)… would the outcome not be a greater use of ethical principles in society? Greater understanding of each other’s struggles in determining the best course of action, and therefore more tolerance towards honest leadership? Would that not be an overall benefit?