I’m going to consider these protocols: OpenID, OAuth, ActivityPub, AT Protocol, and the Signal protocol.

I was actually a user on LiveJournal back when Brad Fitzpatrick launched OpenID. And for a while, it was quite nice to be able to use a single identity provider for everything. The promise of convenience and being able to have one canonical digital “you” that everything could be linked to was quite alluring.

Of course, as Facebook and Google grew ever larger and encompassed more and more of the Web’s active social services… well. The attraction died. And I realized something important: perhaps it was more secure to simply create many sharded identities at the various sites. I didn’t want Facebook, or Google, or indeed any identity provider, to be the sole key to my digital identity. What would happen if that provider went away, whether bankrupted, sold off, or just blown offline by some large-scale attack? And as long as every site used a different set of credentials, a compromise at one could not compromise all. So using individual logins was a win for both security and resiliency.

I like that the Wikipedia article for OpenID explicitly draws some comparisons to OAuth, because I think OAuth has also replaced OpenID to a large extent. Both involve relying on an external identity provider (IdP), though strictly speaking OAuth is an authorization protocol rather than an authentication one. Behind the scenes, OpenID has both sites talking to each other to verify the identity assertion, while OAuth essentially hands a short-lived grant (typically an authorization code) to the user’s browser, which passes it to the second site. That site exchanges the grant for an access token and uses the token to query the first site through whatever API it offers.
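To make the OAuth side concrete, here’s a minimal sketch of the two browser-facing legs of the authorization-code flow from RFC 6749: building the redirect to the provider, and reading the code back when the browser returns. The endpoint, client ID, redirect URI, and scope are hypothetical placeholders, and the back-channel exchange of the code for an access token is left out.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

def build_authorization_url(authorize_endpoint: str, client_id: str,
                            redirect_uri: str, scope: str) -> tuple[str, str]:
    """Build an OAuth 2.0 authorization-code request (RFC 6749, section 4.1.1).

    Returns the URL to send the user's browser to, plus the random `state`
    value the client must remember and check when the browser comes back.
    """
    state = secrets.token_urlsafe(16)  # CSRF protection; the provider echoes it back
    params = {
        "response_type": "code",  # ask for a short-lived authorization code
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
    }
    return f"{authorize_endpoint}?{urlencode(params)}", state

def read_callback(callback_url: str, expected_state: str) -> str:
    """Extract the authorization code from the redirect back to redirect_uri."""
    query = parse_qs(urlparse(callback_url).query)
    returned_state = query.get("state", [""])[0]
    # Constant-time comparison of the echoed state against the one we issued.
    if not secrets.compare_digest(returned_state.encode(), expected_state.encode()):
        raise ValueError("state mismatch: possible CSRF or replayed redirect")
    return query["code"][0]
```

The second site would then send that code, over HTTPS, directly to the provider’s token endpoint to obtain the access token it actually uses against the API.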

In some ways, OAuth has more applicable contexts, because it’s designed to allow users to delegate access even to non-interactive devices. However, OAuth has a major issue if it’s not set up correctly with HTTPS: tokens are typically bearer tokens and are not single-use. If either end device or the connection is compromised, the token can be stolen and reused to gain access, and a stolen refresh token can even be used to obtain new access tokens, persisting access after the original application is no longer active. In fairness, the same issue would arise if OpenID were used without HTTPS, but its use of a nonce and live authentication would at least make a later replay somewhat harder.
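The nonce point is easier to see in code. OpenID 2.0 response nonces start with a UTC timestamp (for example `2005-05-15T17:11:51Z`) followed by optional unique characters, so a relying party can reject anything stale and remember the rest. This is a sketch under assumptions: the five-minute window is an arbitrary choice and the in-memory set stands in for real storage. A bearer token gets no equivalent check; whoever holds it can use it until it expires.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

NONCE_TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # e.g. 2005-05-15T17:11:51Z (20 characters)

class NonceStore:
    """Relying-party replay check for OpenID 2.0 response nonces."""

    def __init__(self, max_skew: timedelta = timedelta(minutes=5)) -> None:
        self.max_skew = max_skew
        # A production store would prune entries older than max_skew.
        self.seen: set[str] = set()

    def accept(self, nonce: str, now: Optional[datetime] = None) -> bool:
        """Return True only the first time a fresh, well-formed nonce is presented."""
        now = now or datetime.now(timezone.utc)
        try:
            issued = datetime.strptime(nonce[:20], NONCE_TIME_FORMAT).replace(tzinfo=timezone.utc)
        except ValueError:
            return False  # no valid UTC timestamp prefix
        if abs(now - issued) > self.max_skew:
            return False  # stale (or from the future), so it cannot be checked against the cache
        if nonce in self.seen:
            return False  # replay of an assertion that was already accepted
        self.seen.add(nonce)
        return True
```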

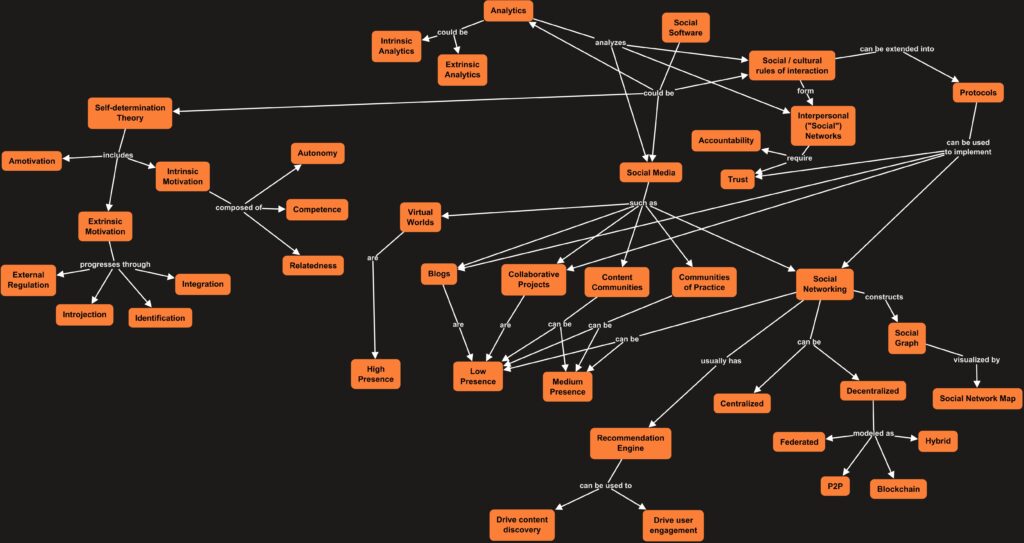

ActivityPub is clearly designed around a general social media paradigm. That every “actor” in the protocol MUST have an “inbox” and an “outbox”, SHOULD provide “followers” and “following” collections, and MAY provide a “liked” collection says a lot, in my mind. Those collections are part of the core actor definition rather than left to extensions, so the assumption that “likes” are a thing is baked right in there, as are the assumptions that people using this protocol want to “follow” each other and allow others to follow them.
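For reference, section 4.1 of the ActivityPub spec grades the actor properties as MUST (`inbox`, `outbox`), SHOULD (`following`, `followers`), and MAY (`liked`). Here’s a small sketch that checks an actor document against those tiers; the actor and its URLs on `social.example` are hypothetical.

```python
import json

# Actor property tiers as listed in ActivityPub section 4.1.
MUST = ("inbox", "outbox")
SHOULD = ("following", "followers")
MAY = ("liked",)

def check_actor(actor: dict) -> dict:
    """Report which of the section 4.1 actor properties are missing, by tier."""
    return {
        "missing_must": [p for p in MUST if p not in actor],
        "missing_should": [p for p in SHOULD if p not in actor],
        "missing_may": [p for p in MAY if p not in actor],
    }

# A hypothetical actor; `id` and `type` come from the spec's object identifier rules.
alice = {
    "@context": "https://www.w3.org/ns/activitystreams",
    "type": "Person",
    "id": "https://social.example/users/alice",
    "inbox": "https://social.example/users/alice/inbox",
    "outbox": "https://social.example/users/alice/outbox",
    "followers": "https://social.example/users/alice/followers",
    "following": "https://social.example/users/alice/following",
    "liked": "https://social.example/users/alice/liked",
}

if __name__ == "__main__":
    print(json.dumps(check_actor(alice)))
```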

The discussion of how servers essentially take messages from actors’ outboxes and post them into other actors’ inboxes is reminiscent of SMTP, of course. But then there’s the line that says “Attempts to deliver to an inbox on a non-federated server SHOULD result in a 405 Method Not Allowed response.” So… the ability to deliver a message to an inbox depends on the two servers being federated. But I don’t see anything in this protocol description about how this federation occurs. Worse, I see a note that while ActivityPub uses authentication in server-to-server federation, there’s no standard for this??? Only a list of best practices? Yo, if you’re going to say that non-federated deliveries should fail, you should definitely have a spec for how federation should occur! Without a spec, people are going to bodge that stuff, and it’s not always going to be pretty!
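As an example of what fills that gap in practice: Mastodon and many compatible servers authenticate inbox deliveries with HTTP Signatures in the style of the draft-cavage specification, which is a convention and not part of ActivityPub itself. This sketch only builds the `Digest` header and the string that gets signed; the actual RSA-SHA256 signature with the actor’s private key is omitted, and the host and path are hypothetical.

```python
import base64
import hashlib
from email.utils import formatdate

def digest_header(body: bytes) -> str:
    """Value for the Digest header: base64 of the SHA-256 of the request body."""
    return "SHA-256=" + base64.b64encode(hashlib.sha256(body).digest()).decode("ascii")

def signing_string(method: str, path: str, host: str, date: str, digest: str) -> str:
    """One 'name: value' line per covered header, pseudo-header (request-target) first."""
    lines = [
        f"(request-target): {method.lower()} {path}",
        f"host: {host}",
        f"date: {date}",
        f"digest: {digest}",
    ]
    return "\n".join(lines)

if __name__ == "__main__":
    body = b'{"type": "Create"}'
    print(signing_string("POST", "/users/bob/inbox", "remote.example",
                         formatdate(usegmt=True), digest_header(body)))
```

The sender then signs that string and sends a `Signature` header naming its `keyId`, the covered `headers`, and the base64 `signature`; the receiving server fetches the actor’s public key and repeats the construction to verify. Every detail of this (which headers are covered, how keys are found) is agreed by convention, which is exactly the interoperability risk described here.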

In fact, I currently think that’s possibly the biggest weakness in ActivityPub (that I see), at least as far as interoperability goes. Theoretically, compatible servers should be able to use the protocol to exchange messages across implementations, and the protocol already notes that clients may or may not be able to edit or interpret the original “source” used to create the rendered “content” in the messages. But without a standard for federation… aren’t we left in a situation where everybody does what seems good to them, with maybe a nod to “best practices” in concept, but with their own specific twist along the way? That seems like a recipe for a very fragmented ecosystem, where various implementations can only reliably talk to themselves, using whatever method each deems acceptable for setting up federation.

Now let’s talk about AT Protocol! The more I read, the more I like it… the entire design of the protocol revolves around a different concept entirely. Rather than focusing on a user-centric model of an inbox and an outbox, AT Protocol conceives of the entire social network from the perspective of all the various services needed to power the network in a decentralized fashion. Handles are domain names, which resolve (via a DNS TXT record or a well-known HTTPS path) to a decentralized identifier (DID) whose document publishes the account’s signing keys and the location of its PDS. All the data in the network is stored within personal data servers (PDSs) for the various accounts. Updates to the network are posted to the various PDSs. These updates are gathered and collated by relays. App views can then display the collected content of the network, with authoritative data always available from the PDS where any specific post originated. As I touched on back in Week 5 (https://comp650.nextdoornetadmin.ca/2025/05/28/week-5-analyzing-social-systems-part-2/), app views can also use different algorithms to alter the view of the network at the user’s request.
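Handle resolution is the piece that ties a human-readable name to the rest of the system. Here’s a sketch of just the string handling: the DNS name whose TXT record may carry `did=...`, the HTTPS fallback path that returns the DID as plain text, and picking the DID out of the TXT records. The actual DNS query, HTTP fetch, and DID document lookup are omitted, the handle is hypothetical, and treating multiple `did=` records as unresolvable is this sketch’s own conservative choice.

```python
from typing import Optional

def dns_txt_name(handle: str) -> str:
    """DNS name whose TXT record may carry the DID for a handle."""
    return f"_atproto.{handle.lower()}"

def well_known_url(handle: str) -> str:
    """HTTPS fallback: this path returns the DID as plain text."""
    return f"https://{handle.lower()}/.well-known/atproto-did"

def did_from_txt(records: list[str]) -> Optional[str]:
    """Pick the DID out of TXT record strings of the form 'did=did:plc:...'."""
    dids = [r[len("did="):] for r in records if r.startswith("did=")]
    # Missing or ambiguous records: treat the handle as unresolved.
    return dids[0] if len(dids) == 1 else None
```

Once the DID is known, its document (from the PLC directory for `did:plc`, or the domain itself for `did:web`) supplies the signing key and PDS endpoint, which is why a handle can change without the account’s data moving.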

This structure is designed to allow any part to be swapped out or replaced at any point. Users can change their handles to use their own domain names, and pull their entire store of data into another PDS at will. Importantly, the PDS contains all the user’s data, including posts, follows, and blocks. The user’s social graph is always ultimately under their control, and cannot be shut down because the user can always retrieve their data (which they control with their own cryptographic keys) and move it to another PDS. Similarly, if a particular relay decides to censor the network by refusing to crawl particular PDSs, anybody can set up another relay to collate the network’s data. Applications offering an app view of the network can be swapped out, and so can the algorithms responsible for ordering and displaying the data.
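Why can data move between PDSs without anyone having to trust the new host? Because the repository is content-addressed: its root hash depends only on the records, not on which server stores them. AT Protocol’s real repositories are Merkle Search Trees of DAG-CBOR records with a signed commit over the root; this sketch swaps in a much simpler flat hash over canonical JSON and leaves out the signature, just to show the property.

```python
import hashlib
import json

def repo_root(records: dict[str, dict]) -> str:
    """Digest over every record, keyed by 'collection/record-key'.

    Sorting the paths and canonicalizing the JSON makes the digest depend only
    on content, never on which server holds it or in what order it was stored.
    """
    h = hashlib.sha256()
    for path in sorted(records):
        entry = json.dumps({"path": path, "value": records[path]},
                           sort_keys=True, separators=(",", ":"))
        h.update(hashlib.sha256(entry.encode("utf-8")).digest())
    return h.hexdigest()

if __name__ == "__main__":
    old_pds = {"app.bsky.feed.post/1": {"text": "hi"},
               "app.bsky.graph.follow/2": {"subject": "did:plc:bob"}}
    new_pds = dict(reversed(list(old_pds.items())))  # same records, different order
    print(repo_root(old_pds) == repo_root(new_pds))
```

With a signature from the user’s key over that root, any app view can confirm that a migrated repository is exactly what the user published.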

At a technical level, this strikes me as a censorship-resistant protocol, but rather than attempting to counter a network-level adversary that controls and filters network access, the goal here is to counter corporate or organization-level control of the server infrastructure that drives the network. (This is complementary to something like Tor, and you could even theoretically extend this to running PDSs at .onion hidden services.) This also means that the network cannot easily be enshittified, because by design there can be no lock-in–all components can be swapped out if they no longer serve the user’s interests.

What does stick out to me right now is that, by design, all data in the PDS is public. There are no access controls. Users can choose to block individuals, but that only prevents them from seeing the blocked user; their own content is still publicly retrievable by the blocked user. I suspect this is, to a large degree, a consequence of making the network so resistant to centralized control. In that setup, no user can unilaterally impose their will on another, and the same is true for any organization that would try to moderate content. There are community-maintained block lists, and moderation services that can attach labels (metadata) to posts, but it’s up to individual app views or relays to respect those configuration choices; they can’t be completely enforced within the social network.
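Here’s a sketch of what “up to individual app views” means in practice: filtering happens at display time, over data that stays public. The `Post` shape and label names are hypothetical simplifications, not AT Protocol’s actual record or label schema.

```python
from dataclasses import dataclass, field

@dataclass
class Post:
    author: str  # DID of the author
    text: str
    labels: set[str] = field(default_factory=set)  # labels attached by moderation services

def visible_to(viewer_blocks: set[str], hidden_labels: set[str], posts: list[Post]) -> list[Post]:
    """What one app view chooses to show a given viewer.

    The underlying posts are untouched and still public: a different app view,
    or a direct fetch from the PDS, can simply ignore these rules.
    """
    return [p for p in posts
            if p.author not in viewer_blocks and not (p.labels & hidden_labels)]
```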

I’ve been using Signal for quite some time, and I have a massive amount of respect for the Signal developers. It is important to note that the Signal protocol is distinct from Signal the application. The Signal protocol can be (and has been) implemented within other applications (such as WhatsApp).

The Signal protocol is also somewhat censorship-resistant, but it has been designed more for security than for other purposes. To this end, the protocol ensures that messages sent between participants are encrypted end-to-end, so that the service operator cannot see what is being exchanged. Of course, this still leaves visible the metadata of who is communicating with whom. A further development was “sealed sender”, which hides the sender’s identity from the server, so that the server only needs to know the recipient’s identity. The Axolotl ratcheting algorithm (later renamed the Double Ratchet Algorithm) also provides forward secrecy and a “self-healing” property: a compromised key does not expose earlier messages, and an attacker who steals the current keys is locked out again once the endpoints complete a fresh Diffie-Hellman exchange as the keys ratchet forward.
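Here’s a sketch of the symmetric half of the Double Ratchet, the KDF chain that gives each message its own key. It follows the spec’s recommended construction (HMAC-SHA256 keyed with the chain key, input byte `0x01` for the message key and `0x02` for the next chain key). The Diffie-Hellman ratchet that provides the self-healing, and the X3DH handshake that would supply the real starting chain key, are left out; the all-zero starting key is only a placeholder.

```python
import hashlib
import hmac

def kdf_ck(chain_key: bytes) -> tuple[bytes, bytes]:
    """One symmetric-ratchet step: returns (next_chain_key, message_key)."""
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    return next_chain_key, message_key

def message_keys(chain_key: bytes, count: int) -> list[bytes]:
    """Derive keys for `count` consecutive messages, discarding each old chain key."""
    keys = []
    for _ in range(count):
        chain_key, mk = kdf_ck(chain_key)
        keys.append(mk)
    return keys

if __name__ == "__main__":
    for k in message_keys(bytes(32), 3):  # placeholder starting chain key
        print(k.hex())
```

Because HMAC is one-way, someone who steals the current chain key can compute future message keys on that chain but not earlier ones; only the next Diffie-Hellman step replaces the chain with one the attacker can’t derive.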

Importantly, while the protocol itself has strong, formally analyzed security properties, the applications and end devices are “out of scope” for those assurances. It’s entirely possible for a secure protocol to be used in a very insecure application. It’s also very possible for it to be used in a messaging application run by an untrustworthy company that makes its money by tracking its users and their communication patterns (*cough* Meta *cough*). As is usually the case with an E2EE protocol, central servers are still needed for necessary purposes, including distributing public keys and holding messages while either end device is temporarily offline. No facility is provided for a user to move to a different application using the protocol while bringing their entire social graph with them; the Signal protocol is only concerned with securing messages between endpoints.

Now… how would I design a distributed / federated social platform? Well… let’s start by establishing some design principles. In no particular order:

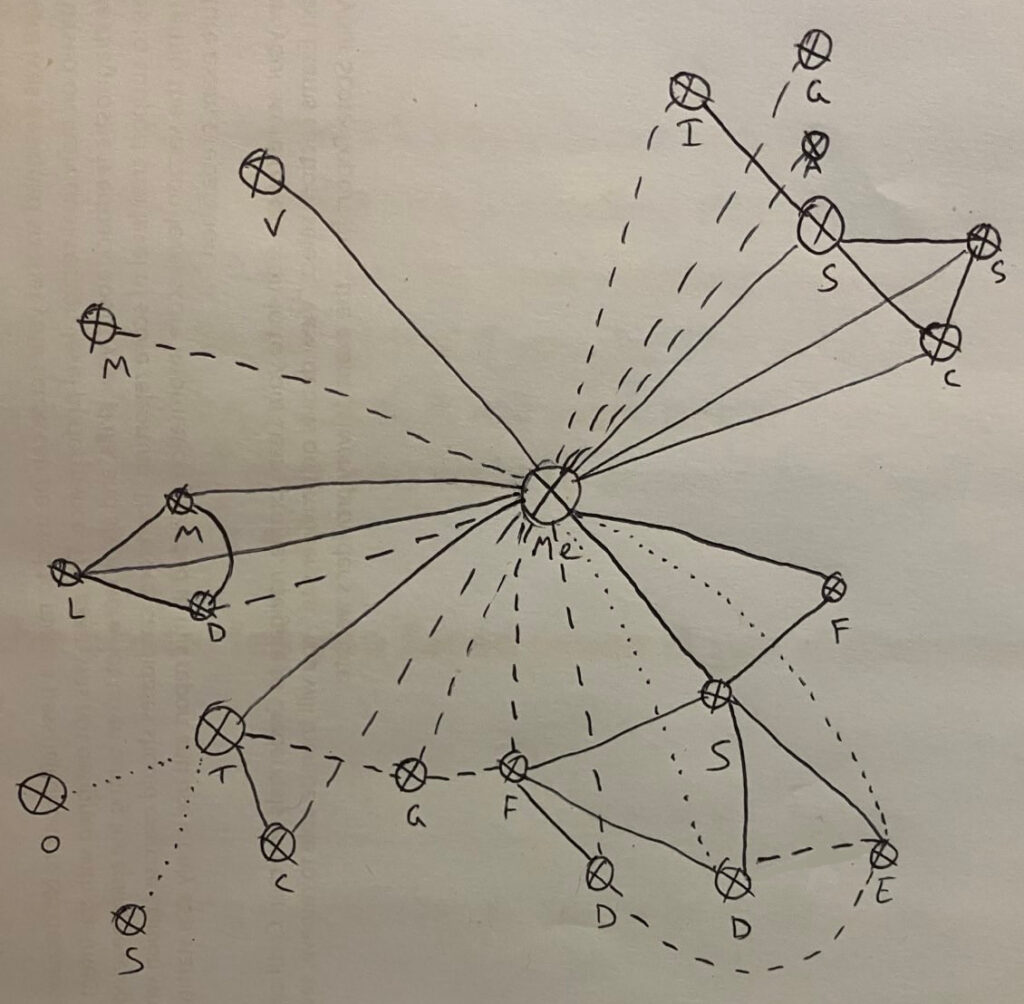

- User data should be controllable by the user. Similar to AT Protocol, this should include their unique preferences, friend list, and block list.

- Users can establish a list of preferred or mandatory data stores. This can be a data store under their personal control, or it might be a store administered by a trusted contact. By default, the network will attempt to store “posted” data in three separate stores for redundancy. Reducing the number of data stores below three should trigger a warning to the user.

- Data stores have a configurable storage limit per user. Users cannot post into the network unless they have sufficient space remaining.

- Every user has a unique handle and public/private keypair. The private key is used to sign outbound posts into the network, asserting the user’s ownership. Public posts and media are signed but otherwise left in cleartext. Posts may also be restricted to specific viewers by encrypting them (and their media) to the recipients’ public keys.

- When data is transmitted into storage, the client also encrypts the data to the store’s public key. This protects the data in transit while still letting the data store operator decrypt it, which is necessary so that operators can scan for and remove illegal materials. All material is still signed with the owner’s keys, so it can be verified that a data store has not altered or tampered with the stored material: it should be either entirely present, or not present at all.

- The first post into the network should be the user’s profile, including their public key and list of data stores.

- Existing posts can be edited, including for key rotation, by providing proof of knowledge of the previous private key used to post them.

- When posting into the network, clients may also send a relay server a short notification that the user has submitted a new post, including the post’s unique ID and its audience (“public”, or alternately a list of specified handles). Relays gossip amongst themselves to share the updates they know about. Posts that are not announced to a relay are quasi-“private”, but are still available if retrieved directly (though their access controls remain intact via the list of recipients to whom they are encrypted).

- Relay servers act as an index of user profiles and of sender/receiver/post ID triples.
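Here’s a minimal sketch of the relay index described in the last two principles. All names (`RelayIndex`, `announce`, `posts_for`, the handles and post IDs) are hypothetical; the point is that a relay stores only profiles and (sender, receiver, post ID) triples, never post content.

```python
from collections import defaultdict
from typing import NamedTuple

class Announcement(NamedTuple):
    sender: str    # handle of the posting user
    receiver: str  # "public" or one recipient handle
    post_id: str   # unique post ID; the content itself stays on the data stores

class RelayIndex:
    """Hypothetical relay: indexes profiles and announcements, never post content."""

    def __init__(self) -> None:
        self.profiles: dict[str, str] = {}  # handle -> ID of the user's profile post
        self.by_sender = defaultdict(set)    # handle -> set of Announcement
        self.by_receiver = defaultdict(set)  # handle or "public" -> set of Announcement

    def register_profile(self, handle: str, profile_post_id: str) -> None:
        self.profiles[handle] = profile_post_id

    def announce(self, sender: str, receivers: list[str], post_id: str) -> None:
        """Record one (sender, receiver, post ID) triple per receiver."""
        for receiver in receivers:
            triple = Announcement(sender, receiver, post_id)
            self.by_sender[sender].add(triple)
            self.by_receiver[receiver].add(triple)

    def posts_from(self, handle: str) -> list[str]:
        return sorted({a.post_id for a in self.by_sender.get(handle, set())})

    def posts_for(self, handle: str) -> list[str]:
        """Posts addressed to this handle, plus everything announced as public."""
        matches = self.by_receiver.get(handle, set()) | self.by_receiver.get("public", set())
        return sorted({a.post_id for a in matches})
```

A client that gets a post ID back from `posts_for` still has to fetch the content from one of the sender’s data stores and, for restricted posts, decrypt it with its own key.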

This is conceptually similar to AT Protocol, but it adds access controls for post visibility as well as redundancy for the network’s content. The redundancy protects against the failure or unavailability of any single personal data store.

A PDS installation should be configurable to allow whitelisted content (for private / friend group storage) or public content. Servers may allocate storage to users based on defaults or based on configured amounts linked to the handle / keypair. This may even allow users to establish commercial relationships with storage providers if desired (but never because it’s required–users can always run their own PDS, and can choose to have fewer than three storage instances if desired, though that reduces reliability).
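The PDS-side policy could be as simple as the following sketch, which combines the allowlist mode with per-handle storage quotas. `StorePolicy` and its fields are hypothetical names, and sizes are plain byte counts.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class StorePolicy:
    """Hypothetical per-PDS policy: public, or restricted to an allowlist of handles."""
    default_quota: int                                    # bytes for any accepted handle
    allowlist: Optional[set[str]] = None                  # None means public content is accepted
    quotas: dict[str, int] = field(default_factory=dict)  # per-handle overrides, e.g. paid plans
    used: dict[str, int] = field(default_factory=dict)    # bytes already stored per handle

    def admit(self, handle: str, size: int) -> bool:
        """Accept and account for an upload, or refuse it."""
        if self.allowlist is not None and handle not in self.allowlist:
            return False  # private / friend-group store: unknown handles are refused
        quota = self.quotas.get(handle, self.default_quota)
        if self.used.get(handle, 0) + size > quota:
            return False  # out of space: the client must use its other stores
        self.used[handle] = self.used.get(handle, 0) + size
        return True
```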

Because relay servers never handle post content directly, they should be relatively fast at serving the indexes described. Clients can look up a user, a user’s posts, or posts destined for them, caching the data retrieved. Clients may display inbound posts by looking up the sending user’s list of PDSs and then retrieving the post content from any listed PDS that can provide it. Clients may also be given an explicit PDS link to retrieve, if a post is not announced to a relay. Clients posting content will attempt to store it directly on all authoritative PDSs; if one is unavailable, an alert should be raised, and the client should either retry the storage operation later or ask a functioning PDS to sync the content to the unavailable PDS once it recovers.
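The client-side posting logic might look like this sketch, which covers the three-store default and the retry-later branch (the alternative of asking a healthy PDS to sync is not shown). The upload callables and store names are hypothetical stand-ins for real network calls.

```python
from typing import Callable

MIN_REPLICAS = 3  # default redundancy target from the design principles

def store_everywhere(post_id: str, blob: bytes,
                     stores: dict[str, Callable[[str, bytes], bool]],
                     retry_queue: list[tuple[str, str]]) -> list[str]:
    """Push a post to every authoritative store; queue failures for a later retry.

    `stores` maps a store name to a hypothetical upload function that returns
    True on success. Returns the warnings to show the user.
    """
    warnings = []
    if len(stores) < MIN_REPLICAS:
        warnings.append(f"only {len(stores)} data store(s) configured; {MIN_REPLICAS} recommended")
    for name, upload in stores.items():
        try:
            ok = upload(post_id, blob)
        except OSError:
            ok = False  # treat network errors like a refused upload
        if not ok:
            retry_queue.append((name, post_id))
            warnings.append(f"store {name} unavailable; will retry {post_id} later")
    return warnings
```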

Locating a user’s profile will also grant access to the user’s public key, allowing posts to be directed toward that user (provided the receiving user has not instructed their client to block display of posts from the sending user). If the user has chosen to make this knowledge publicly available, their list of friends may also be posted, allowing others to traverse the network and find additional connections.

Key rotation is explicitly encouraged, as is data transfer. Servers should be prepared to locate all stored information by key and accept edits (including a new signature over the post) or export it to the client or a new PDS. Such commands or “system posts” are, like regular posts, signed with the handle’s known keypair to authenticate the command.

Relays not only gossip information about new posts, but (by default) about connections to other relays as well. This should allow a new relay to “bootstrap” with only a few connections to existing relays.
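The bootstrap behaviour can be simulated in a few lines. In this hypothetical sketch each gossip round lets every relay learn the peers of the relays it already knows, and connections are mutual, so a newcomer that knows a single seed relay eventually learns the whole (connected) mesh. There is no networking here; it only shows the convergence.

```python
def gossip_round(peers: dict[str, set[str]]) -> dict[str, set[str]]:
    """One round: each relay merges its peers' peer lists; connections are mutual."""
    new = {relay: set(known) for relay, known in peers.items()}
    for relay, known in peers.items():
        for p in known:
            new[relay] |= peers.get(p, set())   # learn what my peers know
            new.setdefault(p, set()).add(relay)  # my peers learn about me
    for relay in new:
        new[relay].discard(relay)  # a relay never lists itself
    return new

def run_until_stable(peers: dict[str, set[str]]) -> tuple[dict[str, set[str]], int]:
    """Repeat gossip rounds until no relay learns anything new."""
    rounds = 0
    while True:
        nxt = gossip_round(peers)
        if nxt == peers:
            return peers, rounds
        peers, rounds = nxt, rounds + 1
```

Since peer sets only ever grow and are bounded by the number of relays, the loop always terminates.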

Groups may be created by a special “system post” which creates a new handle and keypair for multicast encryption. The creating user is registered as the “owner” of the group, and the group then publishes a first post as its “profile”, as with a regular account, including a list of PDSs which the group will use. Group modifications, including to the list of owners and moderators, are approved by the group owner(s). Moderators are given “edit” access to the group’s posts. The group account is then instantiated on the PDSs as a clustered service, using appropriate primary / backup election methods to ensure that the group can respond to incoming input autonomously.

Group members may post to the group by sending a regular post destined only to the group; posts with a mix of group and regular recipients must be rejected. When the group account receives a post destined for it, the group account “wraps” the inbound post and rebroadcasts it using the group’s multicast encryption key. Moderators may delete the post if it is deemed inappropriate, which causes the group to blacklist that specific post ID (to prevent re-transmission). The posting user may also edit their message as normal, and the group should re-wrap and re-broadcast it when the edit is detected. If the original user deletes their message, the group deletes its copy as well.
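The group’s handling rules are essentially a small state machine, sketched below. `GroupAccount` and its methods are hypothetical names, and the “wrap” step is just string formatting standing in for re-encryption under the group’s multicast key.

```python
from dataclasses import dataclass, field

@dataclass
class GroupPost:
    post_id: str
    author: str
    recipients: frozenset[str]
    body: str

@dataclass
class GroupAccount:
    handle: str
    blacklist: set[str] = field(default_factory=set)      # post IDs removed by moderators
    copies: dict[str, str] = field(default_factory=dict)  # original post ID -> wrapped copy

    def receive(self, post: GroupPost) -> bool:
        """Wrap and rebroadcast a member's post or an edit of it; True if broadcast."""
        if post.recipients != {self.handle}:
            return False  # mixed group/regular recipients must be rejected
        if post.post_id in self.blacklist:
            return False  # moderator-deleted posts are never re-sent
        # Placeholder for re-encrypting under the group's multicast key.
        self.copies[post.post_id] = f"[{self.handle}] {post.author}: {post.body}"
        return True

    def moderate_delete(self, post_id: str) -> None:
        self.blacklist.add(post_id)
        self.copies.pop(post_id, None)

    def author_deleted(self, post_id: str) -> None:
        self.copies.pop(post_id, None)  # the group drops its copy too
```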

Because the group’s key is established for multicast encryption, users who have joined the group can instruct their clients to include posts from the group account, and messages from that group will be decrypted using the group’s multicast key rather than the user’s personal keypair.

It is anticipated that very popular groups may require their own set of private PDSs specifically to handle group content, particularly as this content must be “re-broadcast” under the group’s own account. This will, however, take the load of running the “group” account off any public PDSs, as well as alleviating the storage burden and possibly increasing performance.

A completely self-contained network can be created by initiating a relay with external connections disabled. If a client posts to its own private PDS, and notifies only the disconnected relay, then a social network of one user is the result. A second relay can be added by permitting external connections only to a specified target, and the self-contained network can grow from there.
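The peering modes described here boil down to a check a relay would run before accepting or dialing another relay. This is a hypothetical sketch; the mode names are mine.

```python
from enum import Enum
from typing import Optional

class Peering(Enum):
    DISABLED = "disabled"    # fully self-contained network
    ALLOWLIST = "allowlist"  # only explicitly named relays
    OPEN = "open"            # normal gossiping relay

def may_peer(mode: Peering, remote: str, allowlist: Optional[set[str]] = None) -> bool:
    """Hypothetical check a relay runs before accepting or dialing a peer."""
    if mode is Peering.DISABLED:
        return False
    if mode is Peering.ALLOWLIST:
        return remote in (allowlist or set())
    return True
```

Growing the private network is then a configuration change: switch the first relay from `DISABLED` to `ALLOWLIST` with the second relay’s address, and later to `OPEN` if the network should join the wider mesh.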

I explicitly envision this being able to run within Tor as a (set of) hidden service(s). Though I’m sure the devil is in the details… it’s kind of a rough outline, I know.